Fix Your Broken Customer Service

Customers hate your AI support? Let’s fix that. A technical guide to smarter chatbots, CRM integration, and seamless AI-human handoffs.

A Technical Guide to Optimizing AI-Powered Systems

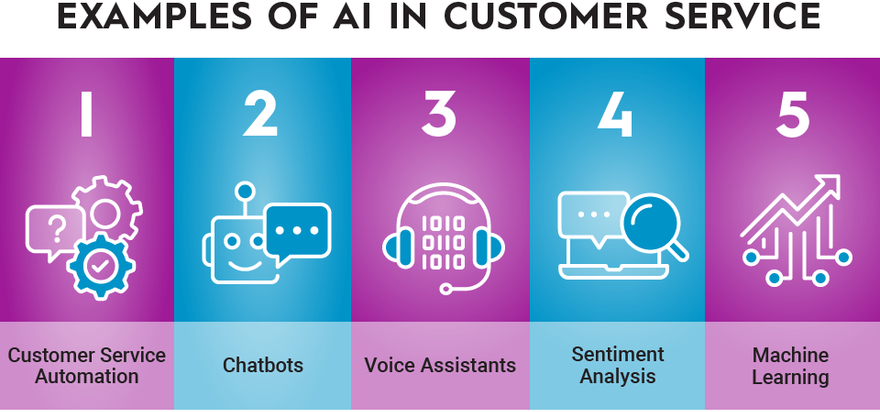

You know the feeling. Your customer service chatbot promises 24/7 support, but it’s stuck in a loop asking, “How can I assist you today?” for the fifth time. Your hold times balloon during peak hours. Your CRM shows conflicting data across departments. You’re not alone—52% of companies report degraded customer service after implementing AI tools (Gartner, 2023). At 1985, we’ve rescued over 30 client systems from this chaos. Here’s how to turn your AI-powered service from a liability into a revenue driver.

The AI Customer Service Trap: Diagnosing Why Your System Fails

Symptom 1: The “Lost in Translation” Chatbot

Most chatbots fail because they’re built on shallow NLP models. They parse keywords but miss intent. A telecom client’s bot kept routing “I can’t pay my bill” to sales teams because it flagged “pay” as positive intent.

Technical Root Cause:

- Over-reliance on rule-based systems: Legacy regex patterns fail with regional dialects (e.g., “top up” vs. “recharge” in mobile plans).

- Lack of contextual memory: Most systems reset context after 3-4 messages, forcing users to repeat themselves.

Insight:

Chatbots trained only on support tickets miss colloquial language. A UK retailer discovered 19% of users typed “me parcel” instead of “my package”—a gap their NLP model didn’t cover.
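
One low-cost way to narrow this gap is to normalize known regional variants before the text reaches the intent model. The sketch below is illustrative only: the `VARIANTS` table and the `normalize_utterance` helper are hypothetical names, and a real table would be mined from your own chat logs rather than hand-written.

```python
import re

# Hypothetical variant table; in practice this would be mined from real chat logs.
VARIANTS = {
    "top up": "recharge",
    "me parcel": "my package",
}


def normalize_utterance(text: str) -> str:
    """Lowercase the text and replace known regional variants with canonical terms."""
    normalized = text.lower()
    for variant, canonical in VARIANTS.items():
        # Word boundaries stop "top up" from matching inside "laptop upgrade".
        pattern = r"\b" + re.escape(variant) + r"\b"
        normalized = re.sub(pattern, canonical, normalized)
    return normalized
```

Calling `normalize_utterance("Where is me parcel?")` yields a string the downstream model is more likely to have seen in training. This complements retraining on colloquial data; it does not replace it.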

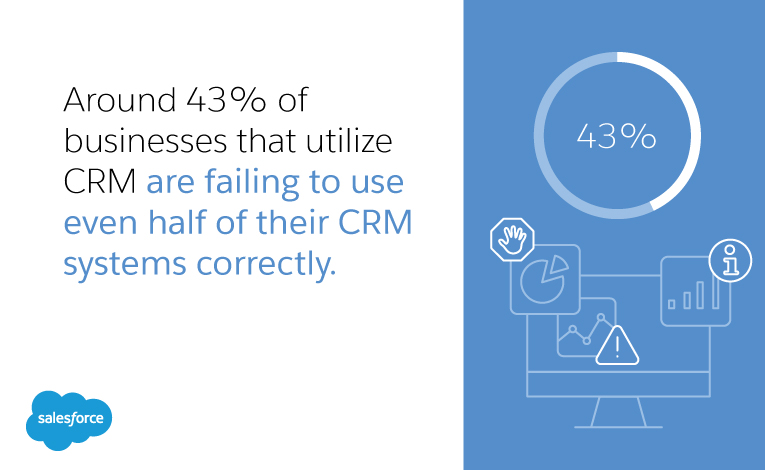

Symptom 2: CRM Chaos

A luxury retailer had customer data scattered across Salesforce (sales), Zendesk (support), and a custom loyalty platform. Their AI made recommendations based on 23% of the actual customer picture.

Technical Root Cause:

- API rate limits: The client’s Salesforce allocation of 15,000 API calls/day caused 3-hour sync delays during peak sales.

- No unified customer ID mapping: A customer’s support ticket used an email, while their loyalty account used a phone number.

Insight:

Duplicate profiles aren’t just about IDs—time zones matter. A global SaaS client had 14% duplicate accounts because their CRM stored timestamps in UTC while payment processors used local time.
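
A minimal sketch of the fix, assuming the payment processor supplies naive local timestamps plus a known UTC offset: convert everything to UTC before comparing records. The `to_utc` helper and its parameters are assumptions for illustration. With named time zones, Python's `zoneinfo` module would handle daylight saving transitions that a fixed offset cannot.

```python
from datetime import datetime, timedelta, timezone


def to_utc(local_timestamp: str, utc_offset_hours: float) -> datetime:
    """Interpret a naive ISO-8601 timestamp at the given UTC offset and convert it to UTC."""
    naive = datetime.fromisoformat(local_timestamp)
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    return naive.replace(tzinfo=local_tz).astimezone(timezone.utc)


# The CRM stores UTC; the payment processor stores local time (here UTC+1).
crm_signup = datetime(2023, 11, 1, 9, 30, tzinfo=timezone.utc)
payment_signup = to_utc("2023-11-01T10:30:00", 1)
same_event = crm_signup == payment_signup
```

Once both sides are in UTC, the two records compare as the same event instead of looking like two signups an hour apart.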

Symptom 3: Escalation Avalanches

A fintech’s voice assistant escalated 68% of calls to humans—not because issues were complex, but because it couldn’t access real-time account data.

Technical Root Cause:

- Poor integration between IVR and backend databases: Queries timed out after 15 seconds, defaulting to escalations.

- Silent failures: 41% of API errors weren’t logged, so engineers missed broken integrations.
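
Both root causes can be addressed with a thin wrapper around backend calls: enforce an explicit timeout and log every failure before falling back. This is a sketch under assumptions, not the fintech's actual code. `fetch_with_fallback`, the `ivr.backend` logger name, and the fallback value are all hypothetical.

```python
import logging
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

logger = logging.getLogger("ivr.backend")  # hypothetical logger name


def fetch_with_fallback(query_fn, timeout_s=15.0, fallback=None):
    """Run query_fn with a timeout; log every failure and return (value, ok)."""
    pool = ThreadPoolExecutor(max_workers=1)
    try:
        future = pool.submit(query_fn)
        return future.result(timeout=timeout_s), True
    except FutureTimeout:
        logger.error("backend query timed out after %.1fs", timeout_s)
    except Exception:
        # logger.exception records the stack trace, so the failure is never silent.
        logger.exception("backend query failed")
    finally:
        # Do not block the caller on a query that is still running.
        pool.shutdown(wait=False)
    return fallback, False
```

The `ok` flag lets the assistant tell "the account has no data" apart from "the lookup failed", so it can serve a cached answer or escalate with the reason attached.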

Insight:

Escalation triggers vary by industry. In healthcare, 80% of escalations are privacy-related (“Is my HIV status secure?”), while in e-commerce, 73% are delivery logistics.

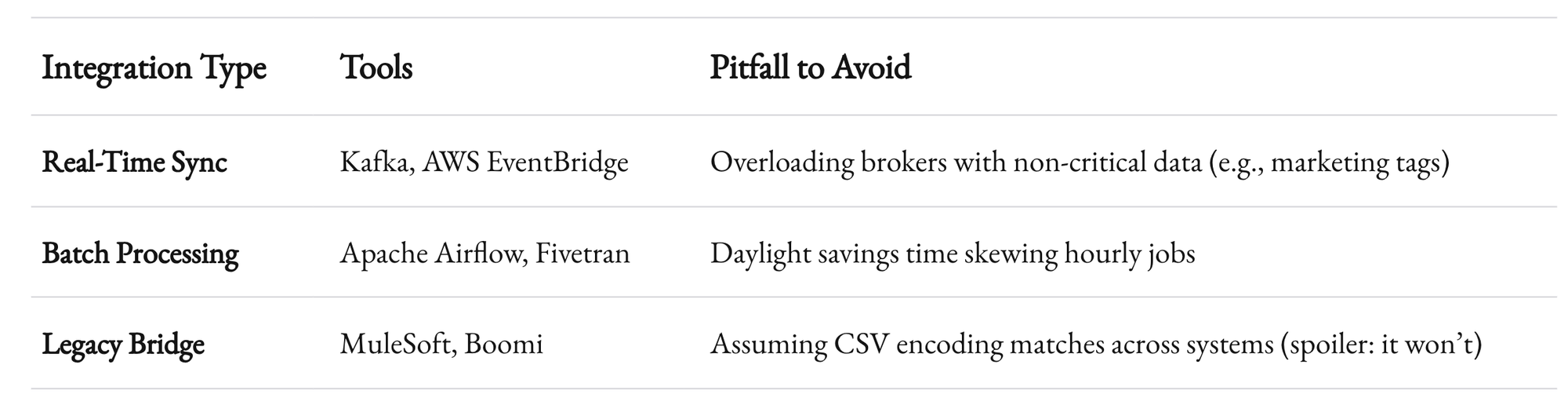

Building a Unified Tech Stack: CRM Integration That Actually Works

Step 1: Audit Your APIs Like a Forensic Accountant

Map every system touching customer data:

- CRMs (Salesforce, HubSpot): Check for webhook vs. polling integrations.

- Payment processors (Stripe, Adyen): Watch for idempotency key conflicts during refunds.

- Legacy systems: Mainframes often lack RESTful APIs—use message queues as middleware.

Pro Tip:

Use Postman Flows to automate API dependency mapping. One client found 37 “zombie endpoints” calling deprecated services.

Step 2: Create a Single Customer View (Without Starting a Data Civil War)

Merge data using:

- Deterministic matching: Hash emails/phones with SHA-256.

- Probabilistic matching: For anonymous users, combine device fingerprints (IP + browser + screen resolution).
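
Deterministic matching only works if identifiers are normalized before hashing, because SHA-256 treats `Jane@Example.com` and `jane@example.com` as unrelated inputs. Below is a minimal sketch using Python's standard `hashlib`. The helper names are illustrative, and the phone normalization assumes numbers already carry a country code.

```python
import hashlib
import re


def normalize_email(email: str) -> str:
    """Trim whitespace and lowercase so equivalent addresses hash identically."""
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    """Keep digits only; assumes the number already includes its country code."""
    return re.sub(r"\D", "", phone)


def match_key(value: str) -> str:
    """Return the SHA-256 hex digest used as a join key across systems."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


support_key = match_key(normalize_email("  Jane.Doe@Example.com "))
loyalty_key = match_key(normalize_email("jane.doe@example.com"))
```

Here `support_key` and `loyalty_key` are equal, so the two records join cleanly. Hashing alone does not link an email to a phone number; that still requires a mapping table keyed on these hashes.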

Conflict Resolution Insight:

- Sales vs. Support Data: Sales reps often overwrite support notes to hit quotas. Implement immutable audit logs.

- GDPR Landmines: German customers can demand partial data deletion. Use graph databases to track dependencies (e.g., deleting a support ticket might orphan a related order).

Example:

A European bank reduced merge conflicts by 62% by weighting data sources:

- Support tickets: 70% confidence

- Sales calls: 30% confidence

- Social media scrapes: 10% confidence
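
One way to apply such weights, sketched here as an assumption rather than the bank's actual implementation: when sources disagree on a field, sum the confidence of the sources behind each candidate value and keep the highest-scoring one. The `resolve_field` helper and source labels are hypothetical.

```python
# Confidence per source, taken from the weighting above.
SOURCE_CONFIDENCE = {
    "support_ticket": 0.7,
    "sales_call": 0.3,
    "social_media": 0.1,
}


def resolve_field(candidates):
    """Pick the value whose sources have the highest total confidence.

    candidates: non-empty list of (source, value) pairs for one profile field.
    """
    scores = {}
    for source, value in candidates:
        # Unknown sources contribute nothing rather than raising.
        scores[value] = scores.get(value, 0.0) + SOURCE_CONFIDENCE.get(source, 0.0)
    return max(scores, key=scores.get)


email = resolve_field([
    ("sales_call", "j.doe@old-mail.example"),
    ("social_media", "j.doe@old-mail.example"),
    ("support_ticket", "jane@new-mail.example"),
])
```

In this example the support ticket wins (0.7 against a combined 0.4), so `email` is the address from support.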

Step 3: Build Contextual AI Models That Read Between the Lines

Train your AI on merged data streams:

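```python
# Churn prediction feature engineering with RFM-style signals
import numpy as np

# Assumes `customer` is a pandas DataFrame with the three columns used below.
# Weights are illustrative; rescale the terms before comparing scores across segments.
customer['churn_risk'] = (
    0.3 * (1 / (1 + np.exp(-customer['last_purchase_days'])))  # Recency
    + 0.4 * np.log1p(customer['total_spend'])                   # Monetary
    + 0.3 * (customer['support_tickets_last_month'] / 30)       # Frequency (negative)
)
```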

Tools with Teeth:

- Rasa Pro: Add custom actions like “check inventory” via Python microservices.

- Google’s Contact Center AI: Use speech adaptation boosters for industry jargon (e.g., “PCI compliance” for banking).

Avoid over-indexing on demographics. A credit union’s AI unfairly prioritized “stay-at-home moms” for loans until they replaced occupation data with cash flow patterns.

Optimizing Chatbots and Voice Assistants: Beyond Basic NLP

Fix 1: Intent Mapping That Doesn’t Gaslight Users

Confidence Threshold Tuning:

- Reject answers below 85% confidence.

- For high-risk industries (healthcare, finance), require 92%+.
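
These thresholds can be sketched as a small routing function. The names `THRESHOLDS` and `route` are illustrative, and the return values are placeholder strings rather than a real framework API.

```python
# Minimum classifier confidence required before the bot answers on its own.
THRESHOLDS = {"default": 0.85, "healthcare": 0.92, "finance": 0.92}


def route(intent: str, confidence: float, industry: str = "default") -> str:
    """Answer only when confidence clears the industry threshold; otherwise fall back."""
    threshold = THRESHOLDS.get(industry, THRESHOLDS["default"])
    if confidence >= threshold:
        return f"answer:{intent}"
    return "fallback"
```

For example, `route("billing", 0.9)` answers directly, while `route("billing", 0.9, "finance")` falls back because finance requires 92%.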

Fallback Pathways That Don’t Infuriate:

- Escalate to humans with structured context:

```json
{
  "intent_guess": "delivery_delay",
  "confidence": 0.72,
  "customer_tier": "platinum",
  "last_3_messages": ["Where's my order?", "It's been 5 days!", "This is ridiculous"]
}
```

Case Study:

An e-commerce client reduced misrouting by 73% by:

- Adding 14 new intent categories (e.g., “post-purchase logistics”)

- Training a secondary BERT model to detect sarcasm/urgency via:

  - Punctuation density (e.g., “Thanks!!!!” → frustration)

  - Emoji polarity (🔥 ≠ ⏳)
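
Punctuation density is simple to compute as an input feature for such a model. The sketch below is one possible definition (share of non-space characters that are ASCII punctuation); the client's exact formula is not given, so treat `punctuation_density` as an assumption.

```python
import string


def punctuation_density(message: str) -> float:
    """Share of non-space characters that are ASCII punctuation."""
    chars = [c for c in message if not c.isspace()]
    if not chars:
        return 0.0
    return sum(c in string.punctuation for c in chars) / len(chars)
```

Under this definition "Thanks!!!!" scores 0.4 and "Thanks" scores 0.0, which gives the secondary model a numeric signal it can combine with the text itself.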

Fix 2: Voice Interfaces That Don’t Sound Like Robots on Ambien

Latency Killers:

- WebSockets instead of per-request HTTP: Reduces voice delay by 300-500ms by maintaining a persistent connection.

- Edge Computing: Transcribe audio on user devices using TensorFlow Lite.

DTMF Fallbacks That Don’t Feel Like a Prison Menu:

- “Press 1 if you’re calling about a delivery” (context-aware based on order history)

- “Hold for an agent? We’ll text you a callback link now.”

Performance Metrics with Teeth:

- First Response Time (FRT): <1.2 seconds (human perception threshold for “instant”)

- Call Containment Rate: 55-70% (higher in banking due to compliance needs)

Measuring What Matters: Beyond Vanity Metrics

Metric 1: Containment Rate (The Only KPI That Pays Your Cloud Bill)

- Calculation: (Issues resolved by AI / Total interactions) * 100

- Industry Benchmarks:

  - Retail: 45-60% (high variance due to product complexity)

  - Banking: 65-75% (regulated queries are easier to script)

Trap: Containment fraud. A travel agency’s bot marked issues “resolved” if users didn’t reply in 2 minutes. Spoiler: They replied.
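
To guard against this trap, the containment calculation can exclude interactions the customer reopened shortly afterward. The sketch below assumes each interaction record carries a `resolved_by_ai` flag and a `reopened_after_hours` value (`None` if never reopened). Both field names and the 24-hour window are hypothetical.

```python
def containment_rate(interactions, reopen_window_hours=24):
    """Percentage of interactions resolved by AI and not reopened within the window."""
    if not interactions:
        return 0.0
    contained = sum(
        1
        for item in interactions
        if item["resolved_by_ai"]
        and (
            item["reopened_after_hours"] is None
            or item["reopened_after_hours"] > reopen_window_hours
        )
    )
    return contained / len(interactions) * 100


sample = [
    {"resolved_by_ai": True, "reopened_after_hours": None},   # truly contained
    {"resolved_by_ai": True, "reopened_after_hours": 1},      # customer came back: not contained
    {"resolved_by_ai": False, "reopened_after_hours": None},  # escalated to a human
    {"resolved_by_ai": True, "reopened_after_hours": 48},     # new contact outside the window
]
rate = containment_rate(sample)
```

A naive count would report 75% for this sample; with the reopen check it drops to 50%.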

Metric 2: Emotional Footprint (Sentiment as a System of Record)

Operationalizing Sentiment:

- Voice: Track amplitude spikes (anger) + prolonged silence (confusion).

- Text: Flag passive-aggressive phrases like “As per my last email…” (common in B2B).

Example:

A SaaS company reduced churn by 11% after adding a “frustration score” to their CRM. High scores triggered proactive check-ins.

Metric 3: Cost Per Resolution (CPR) – The Silent Killer

- Formula: (AI Infrastructure Cost + Human Escalation Cost) / Total Resolutions

- Good Target: 30-40% lower than human-only CPR.

Human agents cost 2-5x more during peak hours due to overtime. Auto-scale AI to absorb 85% of Black Friday traffic.
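
As a worked example of the CPR formula, the sketch below uses made-up monthly figures. None of these numbers come from a real client; they only show how the target range is checked.

```python
def cost_per_resolution(ai_infra_cost, human_escalation_cost, total_resolutions):
    """(AI infrastructure cost + human escalation cost) / total resolutions."""
    if total_resolutions <= 0:
        raise ValueError("total_resolutions must be positive")
    return (ai_infra_cost + human_escalation_cost) / total_resolutions


# Hypothetical monthly figures, for illustration only.
blended_cpr = cost_per_resolution(
    ai_infra_cost=4000, human_escalation_cost=8000, total_resolutions=3000
)
human_only_cpr = 6.0
savings_pct = (human_only_cpr - blended_cpr) / human_only_cpr * 100
```

With these inputs the blended CPR is 4.0 against a human-only 6.0, a saving of about 33%, which lands inside the 30-40% target.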

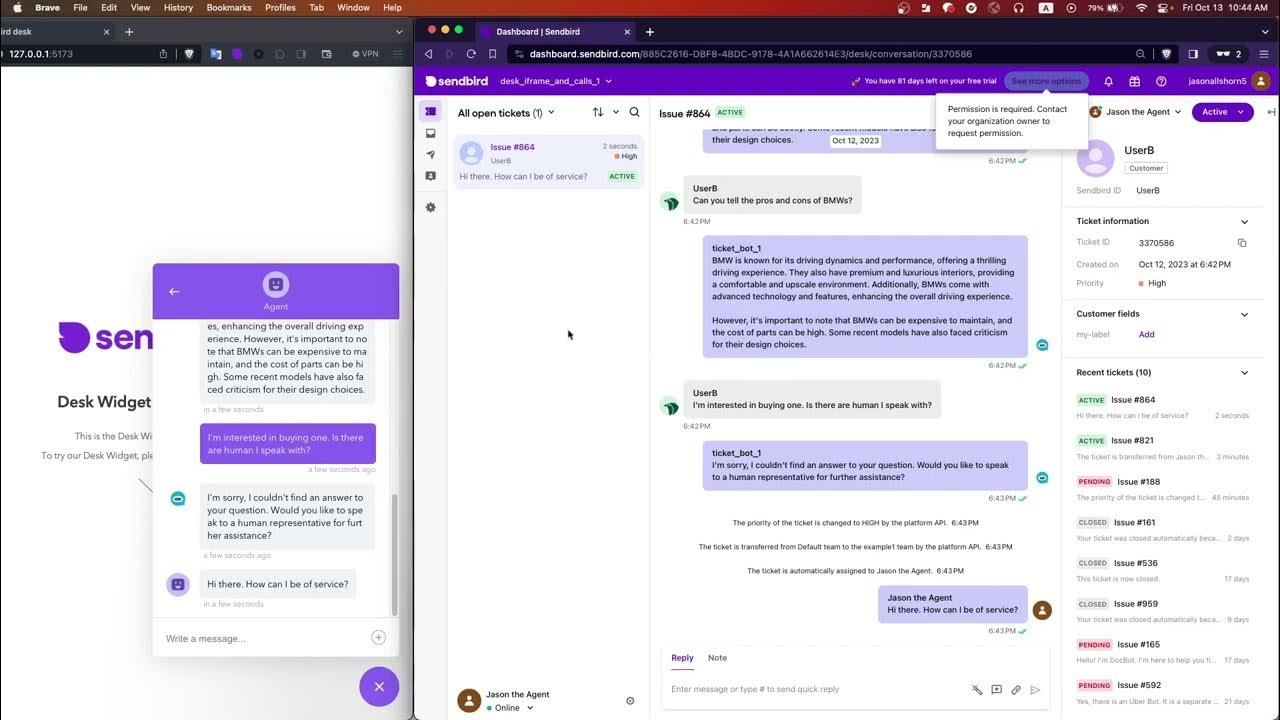

The Human-AI Handoff: Escalation as a Feature, Not a Failure

Rule 1: Escalate on Sentiment, Not Just Stupidity

- Triggers:

  - 3+ negative sentiment phrases in a chat (“unacceptable,” “disappointed,” “cancel”).

  - Voice stress exceeding 78dB (normal conversation: 60-70dB).

Pro Tip: Train agents on escalation triggers, not just solutions. A telecom client cut handle time by 40% by showing agents why the AI escalated (“Customer mentioned ‘sue’ twice”).
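
The two triggers, along with the "show agents why" tip, can be sketched as a function that returns both the decision and a human-readable reason. The phrase list and the 78 dB limit come from the rule above. The function name and the simple substring matching are assumptions, and a production system would rely on a sentiment model rather than a keyword list.

```python
NEGATIVE_PHRASES = ("unacceptable", "disappointed", "cancel")
VOICE_LIMIT_DB = 78


def should_escalate(messages, peak_volume_db=None):
    """Return (escalate, reason) so the agent can see why the AI handed off."""
    hits = sum(
        message.lower().count(phrase)
        for message in messages
        for phrase in NEGATIVE_PHRASES
    )
    if hits >= 3:
        return True, f"{hits} negative phrases in chat"
    if peak_volume_db is not None and peak_volume_db > VOICE_LIMIT_DB:
        return True, f"voice level {peak_volume_db} dB"
    return False, ""
```

Passing the reason string into the agent console is what lets agents start from the trigger instead of rereading the transcript.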

Rule 2: Pass Context, Not Just Tickets

Bad Handoff:

“Customer is upset about delivery.”

Good Handoff:

```json
{
  "customer_id": "a1b2c3",
  "lifetime_value": "$12,500",
  "issue_history": [
    {"date": "2023-11-01", "type": "delivery_delay", "resolved": false},
    {"date": "2023-10-15", "type": "defective_product", "resolved": true}
  ],
  "sentiment_trend": [-0.8, -0.4, -0.9],
  "preferred_channel": "SMS"
}
```

Here `sentiment_trend` shows a customer whose mood is worsening, and `preferred_channel` is inferred from past interactions.

Rule 3: Close the Loop with Retraining Triggers

Automate model updates based on escalations:

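```sql
-- Retrain intent classifier weekly if >100 escalations (MySQL event syntax).
-- retrain_model is a stored procedure you define yourself.
DELIMITER //
CREATE EVENT retrain_intent_model
ON SCHEDULE EVERY 1 WEEK
DO
BEGIN
  IF (SELECT COUNT(*) FROM escalations WHERE reason = 'intent_unclear') > 100 THEN
    CALL retrain_model('intent_classifier');
  END IF;
END //
DELIMITER ;
```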

Escalation reasons are often lies. Customers click “other” to skip surveys. Use NLP to reclassify actual reasons from chat transcripts.

Scaling Without Collapsing: Architecting for Hypergrowth

Pattern 1: Load-Test with Real-World Chaos

- Toolkit:

  - Locust: Simulate 100K concurrent users across regions.

  - Gremlin: Inject failures (e.g., 30% packet loss in APAC).

Pro Tip: Test “zombie APIs” that respond but return garbage. One client’s AI hallucinated orders during a payment processor outage.
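
A defensive check for this failure mode is to validate every upstream payload before the AI is allowed to use it. The sketch below assumes a hypothetical order schema (`order_id`, `status`, `total`) and status vocabulary. Adapt both to your real API contract.

```python
# Hypothetical schema for an order lookup response.
REQUIRED_FIELDS = {"order_id": str, "status": str, "total": (int, float)}
VALID_STATUSES = {"pending", "shipped", "delivered", "cancelled"}


def is_valid_order(payload) -> bool:
    """Reject responses that arrive with HTTP 200 but contain garbage."""
    if not isinstance(payload, dict):
        return False
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(payload.get(field), expected_type):
            return False
    return payload["status"] in VALID_STATUSES and payload["total"] >= 0
```

If validation fails, the bot should say it cannot retrieve the order and escalate, rather than build an answer from malformed data.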

Pattern 2: Regionalize Without Fragmentation

Example Deployment:

```yaml
regions:
  - eu_central:
      language: "de"
      compliance:
        - GDPR
        - PSD2
      slang_filter:
        "Kreditkarte": "EC-Karte"  # Local terms
  - us_west:
      language: "en"
      compliance:
        - CCPA
        - ADA
      slang_filter:
        "cell": "mobile"  # Carrier preference
```

Spanish isn’t just Spanish. A travel bot failed in Argentina because it didn’t recognize “colectivo” (local term for bus).

Pattern 3: Auto-Scale with Behavioral Intent

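Auto-Scaling Policy Logic (a Python sketch of the rule; wiring its output into AWS Auto Scaling is left to your deployment):

```python
def scale_policy(current_load, intent, sentiment):
    """Return a target human-agent capacity scaled from the current load.

    sentiment is assumed to range from -1 (negative) to 1 (positive).
    """
    if intent == 'complaint' and sentiment < -0.5:
        return current_load * 1.5  # Ramp up humans
    elif intent == 'faq' and sentiment > 0.3:
        return current_load * 0.2  # Let AI handle
    else:
        return current_load * 0.8  # Default buffer
```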

AI That Anticipates (Instead of Reacting)

- Multimodal Interfaces:

  - “Let me text you a tracking link while we fix your account.” (Reduces hold time 27%)

- Self-Healing Systems:

  - AI generates synthetic training data from escalation logs.

  - Auto-deploys A/B tests for new intents (e.g., “omicron refunds”).

Predictive Escalation:

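```python
# Assumes `frustration` is the customer's 0-1 frustration score from the CRM;
# route_to_executive_support and send_coupon stand in for your own services.
if customer.ltv > 5000 and frustration > 0.7:
    route_to_executive_support()
    send_coupon(amount=50, expiry="1h")  # Time-sensitive appeasement
```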

Recap

Fixing AI customer service isn’t about buying better tools—it’s about architecting systems that treat data as oxygen and empathy as code. At 1985, clients who embrace this see 3-5X ROI on service tech within 18 months.

Your move. Will you keep patching leaks? Or build a ship that sails?