Integrating ChatGPT in Mobile Apps: A Comprehensive Guide

From backend setup to balancing brand tone, learn everything about integrating ChatGPT into mobile apps in this in-depth guide."

Why adding a chatbot isn’t the hard part - but making it actually useful is.

Let’s get one thing out of the way: slapping ChatGPT into your mobile app is not rocket science anymore. Every second indie project on Product Hunt has some form of AI chat bolted onto it. But most of those integrations? They’re glorified demos - shiny wrappers with zero thought around user context, data flow, or what happens when the model hallucinates your user into a lawsuit.

In this guide, I’ll walk you through how to properly integrate ChatGPT (or its API equivalents) into a mobile app - especially if you're building for real-world users, not just weekend hackathon judges. Whether you’re a scrappy founder or a product engineer elbow-deep in Swift or Kotlin, this one’s for you.

Welcome to the “Model-as-a-Feature” Era

It’s not about AI-first. It’s about user-first with AI where it counts.

Back in our Bangalore office, we once shipped an AI-powered onboarding assistant inside a fintech app. On paper, it looked neat: users could “ask anything.” In production? It turned into “ask anything and we’ll confuse you in five languages.” We learned fast: LLMs need structure, not just swagger.

Today’s users don’t want generic. They want:

- AI that knows their context (without retyping their life story)

- Instant responses that don’t kill battery life or bandwidth

- Language that feels human, but not creepy or overfamiliar

Building that takes more than dropping OpenAI’s endpoint in your code. It means thinking like a product person, not just a prompt engineer.

Use Case or Useless Case?

The first decision isn’t technical - it’s philosophical.

Just because you can add ChatGPT doesn’t mean you should. So, before you write a single line of integration code, ask yourself:

Is this a feature or a crutch? We’ve seen mobile apps add AI chat for:

- Customer support triage (great, if well-trained on your actual FAQs)

- Writing helpers in note-taking or journaling apps (solid, if local context is baked in)

- Conversational onboarding (only works if you define the funnel first)

- Coaching or therapy bots (huge risk if unmoderated or not medically vetted)

- Search replacement (“Ask anything about your orders”) - doable, but guardrail-heavy

In our experience, the best use cases:

- Have bounded scopes (“Summarise this PDF I uploaded” > “Talk to this PDF”)

- Use ChatGPT to enhance - not replace - core UX

- Have user-specific memory, not just chat logs

Remember: the model doesn’t know your app’s schema or business logic unless you tell it. And prompt tokens are not a substitute for product thinking.

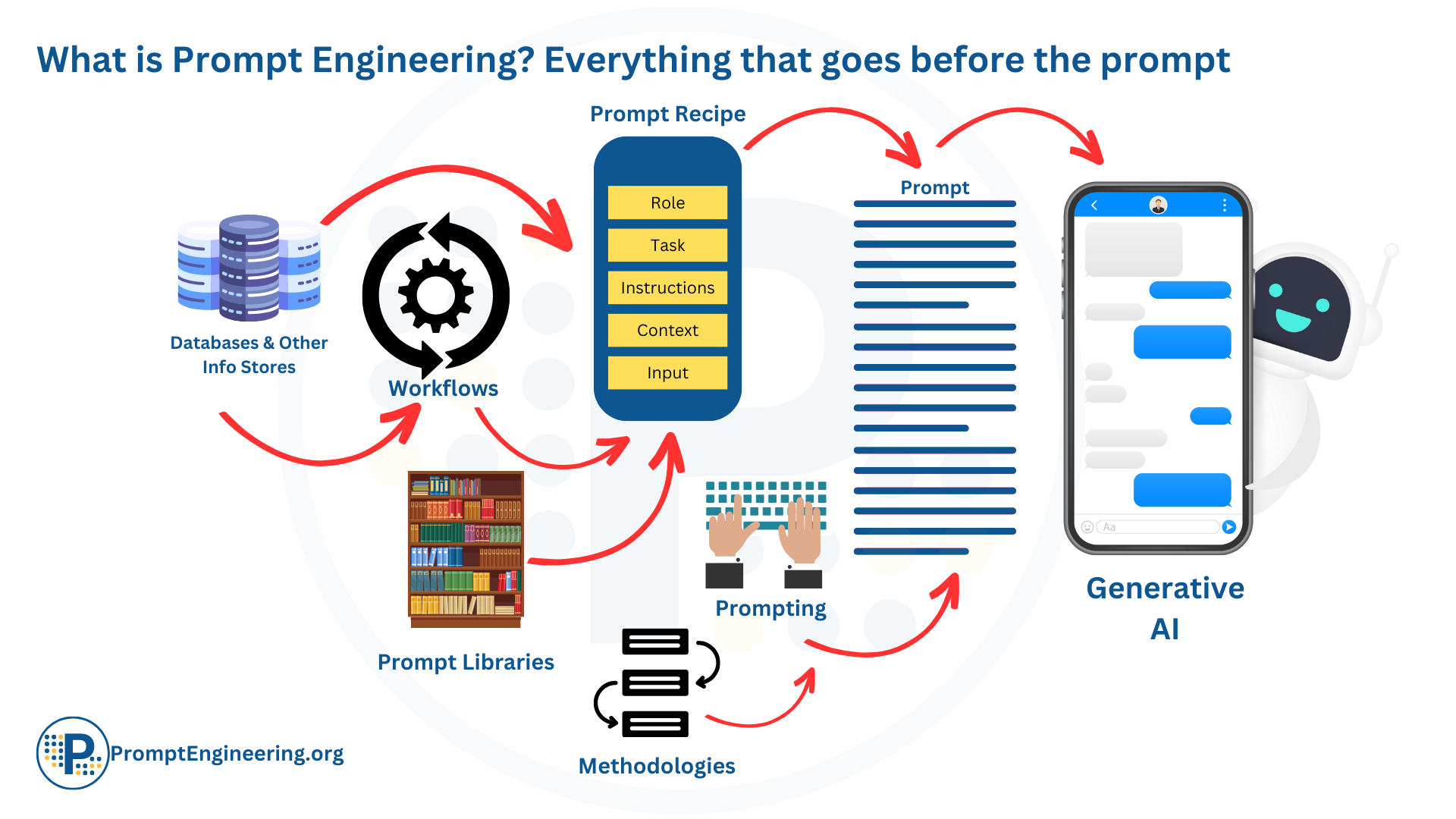

Prompt Engineering Is Just the Start

There’s no such thing as a one-size-fits-all prompt - especially in mobile.

Let’s say you’re building a language learning app. You want ChatGPT to roleplay as a French tutor. Great idea. But that means:

- Few-shot examples that mimic real student-teacher interaction

- Guardrails for inappropriate or off-topic responses

- A fallback flow if the API times out or throws gibberish

In production, we wrap all our prompts in a templating layer - usually a backend service that injects app state (user preferences, recent actions, metadata) into the prompt dynamically.

Oh, and forget relying on one prompt forever. You will need A/B testing for different prompt styles, tones, and levels of formality. Think of it as promptOps - an actual discipline now.

A few rules of thumb that have saved us hours of debugging:

- Always inject role and tone explicitly (“You are a polite, friendly app assistant…”)

- Cap response length with precision (“Reply in 2 sentences or less”)

- Prepend system messages that simulate app context (“This user is learning Spanish at beginner level…”)

If your prompt has more disclaimers than a cigarette pack, you’re doing it right.

Architecture: Local UI, Cloud Brain

This is where most teams trip up. They think of ChatGPT like a button. But it’s more like a mini operating system.

Your integration architecture should separate:

- UI/UX Layer: The mobile app interface and local state handling

- Inference Gateway: Your backend service that talks to the OpenAI API (or other LLMs)

- Memory/Context Store: Where you keep structured past interactions (user history, feedback, settings)

- Orchestration Layer: Where business logic meets prompt generation

Here’s our typical stack for mobile + GPT:

- React Native or Swift/Kotlin frontend

- Node.js or Python FastAPI backend (sits between app and LLM API)

- Redis/PostgreSQL for context memory

- Cloudflare Workers or Vercel Edge Functions for low-latency caching

- OpenAI API, Claude, or Mistral depending on use case and cost

Don’t forget analytics. We log every user message + model response + latency + feedback flag into a warehouse (BigQuery or Clickhouse). Without this, you’re flying blind.

Pro tip from a recent sprint: use [LangChain Expression Language (LCEL)] or [PromptLayer] if you want visibility into which prompt versions are triggering which user outcomes.

Safety, Speed, and Sanity Checks

Your app will go viral the day it says something offensive.

Mobile apps with ChatGPT need fail-safes at every layer. Some basics that saved our asses more than once:

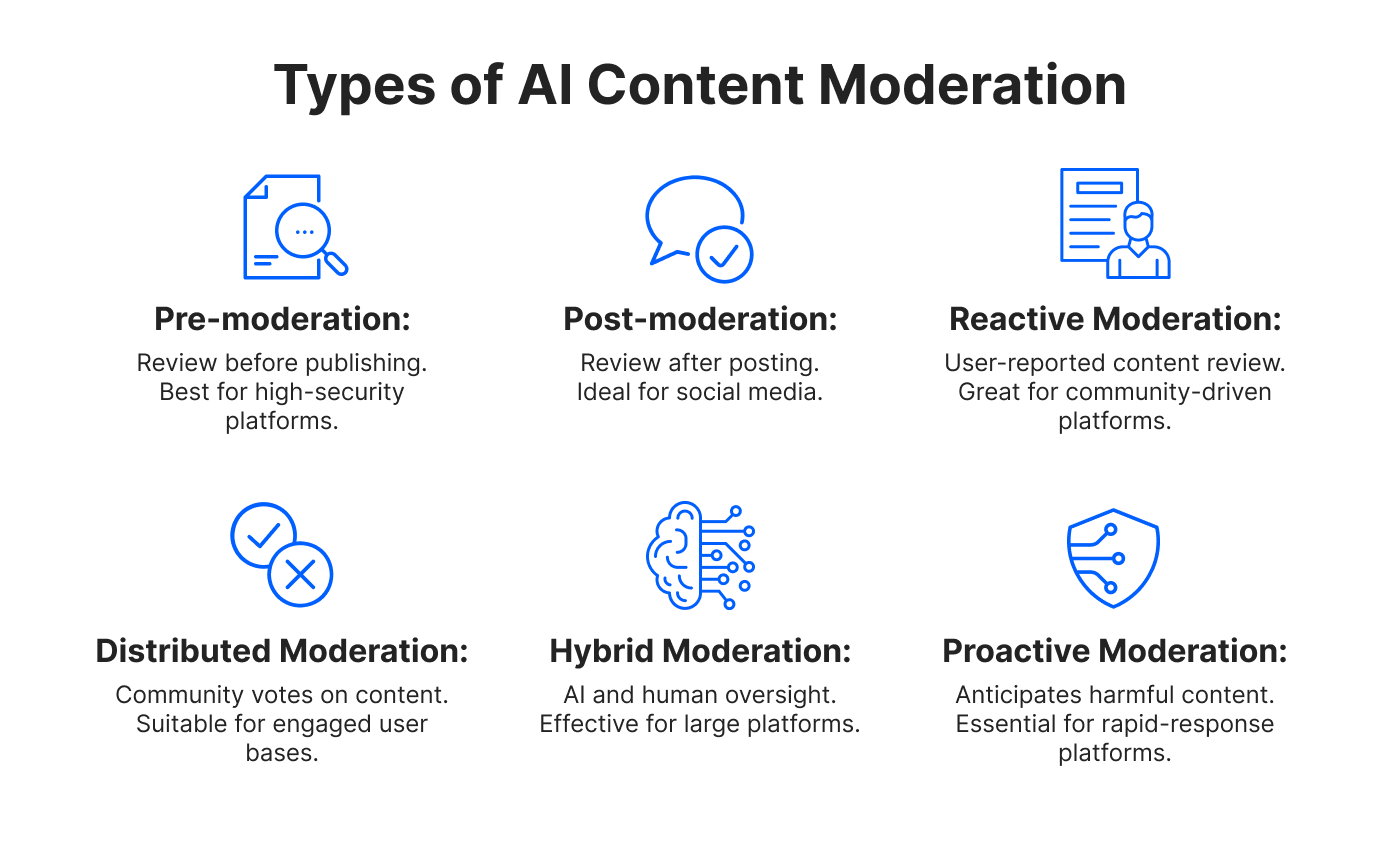

- Moderation: Run inputs and outputs through OpenAI’s moderation API or your own filters. We once had a user ask our AI coach for “ways to escape taxes” and got a detailed plan. Not cool.

- Rate limiting: Prevent prompt spam or infinite retries from flaky clients.

- Latency fallback: If the API takes >5s, show a canned response or spinner. Users will bounce otherwise.

- Token control: Mobile apps have limited screen real estate. Cap response tokens or risk scroll-induced thumb injuries.

- Retry logic: Models sometimes time out or error. Don’t dump that on the user. Retry once, then fallback.

And remember: mobile users aren’t sitting at a desk. They're in a metro, half-asleep, juggling a chai. Your AI better not crash because the WiFi dropped mid-response.

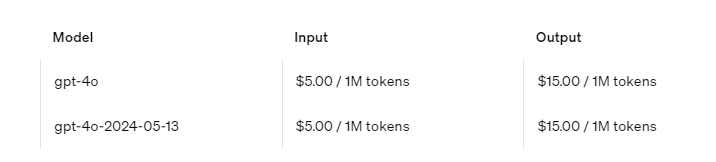

Pricing and Rate Limits Will Surprise You

Welcome to the “optimise every token” economy.

You’re not running a chatbot - you’re running a cost centre. OpenAI charges by token. So every verbose reply, long prompt, and multi-turn chat eats into your margins.

From our past few builds, here are cost-cutting tactics that actually worked:

- Precompute where possible. If a response is static or based on a known dataset, cache it.

- Use smaller models (gpt-3.5-turbo > gpt-4) unless the complexity requires GPT-4.

- Cap user interactions (e.g., 10 free messages/day) and offer premium plans for power users.

- Compress memory. Instead of feeding 10 full chat messages into context, summarise them and inject only what's needed.

And for the love of Shahrukh Khan, don’t stream every word. Unless you're building a roleplay therapist, batch the response or use typewriter animation to fake streaming.

Top 5 Mistakes We’ve Seen (And Made)

Here’s the greatest hits compilation from our own (slightly painful) AI app builds:

1. “We forgot to version prompts.” Changing a prompt live broke downstream analytics. Now every prompt version gets a UUID.

2. “The AI gave legal advice.” We didn’t add disclaimers. User thought it was official. Now we preface risky replies with “I’m not a lawyer, but…”

3. “ChatGPT said something in Hindi. We didn’t ask for that.” Language was inferred from user name. We now specify language in the system prompt.

4. “The chat history vanished after app crash.” No persistence layer. Now we store interaction summaries in encrypted local DB + server-side backup.

5. “App was too slow in low bandwidth areas.” Now we use hybrid approach: canned responses for common queries + ChatGPT fallback.

Don’t wait to learn these the hard way.

Reality Check: Do You Need ChatGPT or Just Good UX?

A dirty secret: some of the best “AI” features are just autocomplete, fuzzy search, or rules-based scripts.

Before you wire in OpenAI, ask:

- Can we solve this with structured inputs + known outputs?

- Does the model improve user experience more than 15% over deterministic logic?

- Can we explain its output if the user asks “why?”

If the answer is “No” to all three, maybe don’t add LLMs yet. Better a crisp UX than a confused chatbot.

Bonus Bytes: Your AI App Integration Checklist

✅ Use case is narrow, well-defined, and adds real user value

✅ Prompt design includes system message, examples, and caps

✅ You have a backend orchestration layer for injecting context

✅ Inputs and outputs are moderated and rate-limited

✅ You track every token, cost, and user rating for responses

✅ You’ve tested on 3G networks and old devices

✅ Legal, privacy, and safety disclaimers are in place

✅ You’ve planned for versioning, analytics, and rollback

✅ You’ve asked: “Would this feature still be valuable without AI?”

Wrap-up

ChatGPT is a tool - not a magic wand. When used thoughtfully, it can unlock delightful, sticky user experiences. When bolted on for the sake of buzzwords, it’ll just bloat your app and confuse your users.

We’ve built, broken, and rebuilt AI features inside real-world mobile apps - from productivity tools to coaching platforms. And here’s the truth: the difference between cringe and clever is context. Don’t just integrate the model. Design for the moment.

Want to brainstorm your app’s ChatGPT integration over chai? Ping me. Or better, fire up a sprint with 1985’s Bangalore crew.

FAQ

1. What’s the best use case for ChatGPT in a mobile app?

The best use cases are focused, high-context, and enhance your core UX—like onboarding assistants, contextual writing helpers, or FAQ bots fine-tuned on your actual product data. Generic “Ask me anything” bots rarely add real value and often confuse users.

2. Do I need to use OpenAI’s GPT-4 or is GPT-3.5 good enough?

Unless your use case involves nuanced reasoning, multilingual support, or complex logic, GPT-3.5 is faster, cheaper, and perfectly capable for most mobile tasks. Always prototype with 3.5 first and upgrade only if user experience truly demands it.

3. How do I structure the prompts for best results on mobile?

Use system messages to set the assistant’s role, tone, and context. Inject user-specific data dynamically through a backend layer. Keep prompts short, cap response length, and avoid ambiguity. Prompt versioning is essential—track every change.

4. What’s the ideal architecture for integrating ChatGPT in a mobile app?

Keep your UI lightweight and delegate prompt construction and API calls to a backend gateway. Store interaction context in a memory layer (like Redis or a database) and use caching and retries to optimise latency. Separate business logic from chat logic.

5. How can I handle safety and moderation concerns?

Run both input and output through OpenAI’s moderation API or your own filters. Add disclaimers to sensitive categories like legal, health, or finance. Limit topics via prompt scope and use fallback responses for flagged queries.

6. What do I do when the API response is slow or fails?

Set timeouts and show spinners or canned fallback responses after a delay. Implement retry logic with exponential backoff. If speed is critical, precompute frequent queries and cache responses to reduce dependency on live API calls.

7. How should I manage costs when using ChatGPT at scale?

Optimise prompts to minimise token usage, compress memory into summaries, and throttle user access based on plan tiers. Use GPT-3.5 by default and only invoke GPT-4 for premium or complex tasks. Track usage per user for billing or analytics.

8. Can ChatGPT replace my traditional search or help centre?

Only partially. For common queries, a well-prompted GPT model can outperform static FAQs, but for compliance-heavy or structured knowledge, traditional search remains more reliable. Consider hybrid models that use GPT for conversational UX and fallback to search indexes.

9. Should I store chat history on-device or server-side?

For lightweight interactions, local storage works, but anything involving memory, multi-device sync, or analytics needs server-side storage. Encrypt sensitive data and always offer users a way to clear or export their history.

10. How do I test and improve the model’s responses over time?

Log every user input, model response, and feedback signal. Run A/B tests on different prompts and track impact on retention, CSAT, or engagement. Build a promptOps workflow to version prompts, monitor hallucinations, and deploy safe rollbacks.