Offshore Rates 2025: A Blind Tender Experiment Across 12 Countries

Offshore pricing is only half the story. Here’s what dev teams in 12 countries quoted us, and how they actually showed up.

We ran a pricing experiment across 12 countries to answer the question every CTO secretly asks: “Are we overpaying for our offshore team?”

Offshore rate cards are like Tinder bios: everyone claims to be flexible, efficient, and fluent in English. But swipe right, and you may find yourself ghosted mid-sprint or cleaning up a spaghetti codebase six months later.

Every year, some consultancy drops a slide deck showing average hourly rates by region. Poland $50/hr, India $25/hr, etc. But those PDFs never tell you what you actually get for those rates—or what it feels like to work with that team across three time zones and two missed standups.

So we decided to test it ourselves. No whitepapers. No sales calls. Just a controlled experiment that asked the only question that matters: what’s the real delta between price and quality in offshore dev markets today?

How We Set the Stage

The brief was simple: build a mid-scope SaaS MVP, which we’ll call “Airtable for inventory.” Think CRUD-heavy, some light integrations, auth layer, and a decent UI. Tech stack: React, Node, Postgres, AWS. Three-month timeline. Realistic, but tight.

We created a clean spec, set up a burner email and a fake founder LinkedIn profile, and sent the spec to 36 dev shops across 12 countries. No branding, no hint we were a dev firm ourselves. Everyone got the same deck. No negotiation, no interviews. We asked for:

- A quote in USD

- Estimated timeline

- How they’d approach the build

- Optional: code samples, team bios, or past work

The catch? We wouldn’t reveal the company behind the brief—until after we had their proposals.

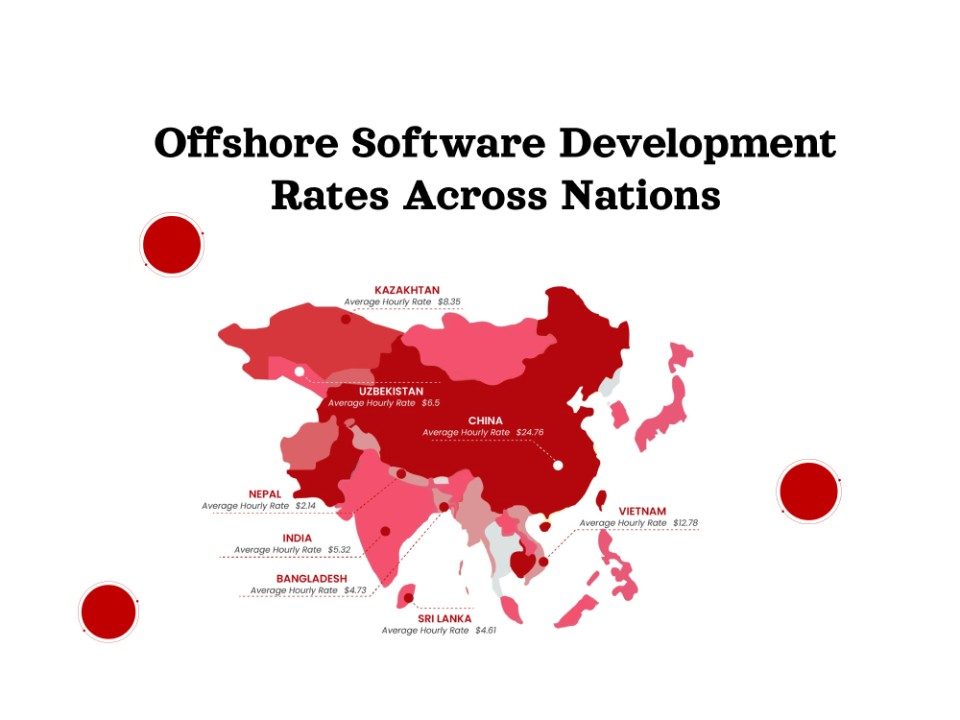

The 12 Countries We Chose (and Why)

We didn’t spin the globe and stop at random. These are the countries that consistently show up in RFPs from US and EU startups—either because of price, talent pool, or sheer inertia:

- India

- Vietnam

- Philippines

- Poland

- Ukraine

- Romania

- Brazil

- Mexico

- Egypt

- South Africa

- Nigeria

- Indonesia

Some are well-established outsourcing destinations. Others are emerging ecosystems trying to climb the value chain. A few—like Nigeria and Egypt—are often written off entirely, which made us doubly curious.

What the Bids Told Us (and Didn’t)

Let’s start with the table. This is what came back from each country’s top three shops (anonymized to keep the playing field fair):

| Country | Lowest Quote | Highest Quote | Avg Timeline | Code Samples Sent | English Comms |
|---|---|---|---|---|---|
| India | $16K | $32K | 12 weeks | 2/3 | Functional to Fluent |
| Vietnam | $14K | $26K | 11 weeks | 3/3 | Decent, brief |
| Philippines | $18K | $30K | 13 weeks | 1/3 | Strong |
| Poland | $35K | $58K | 10 weeks | 3/3 | Excellent |
| Ukraine | $28K | $45K | 10–12 weeks | 3/3 | Strong |
| Romania | $26K | $40K | 10 weeks | 2/3 | Solid |
| Brazil | $22K | $39K | 12 weeks | 2/3 | Conversational |
| Mexico | $24K | $42K | 11–13 weeks | 2/3 | Fluent |
| Egypt | $12K | $22K | 14 weeks | 1/3 | Passable |
| South Africa | $20K | $36K | 13 weeks | 1/3 | Fluent |
| Nigeria | $10K | $18K | 15 weeks | 0/3 | Functional |
| Indonesia | $14K | $27K | 12 weeks | 1/3 | Okay-ish |

But here’s the thing: the spread in pricing isn’t what surprised us. It was the confidence gap between teams who’d built 30 products and those who still treat MVPs like fixed-scope web dev projects.
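If you want to put a number on that pricing spread, here is a minimal Python sketch using the lowest and highest quotes from the table above. Summarizing each range by its midpoint and its high-to-low ratio is an illustrative choice, not something the experiment prescribed, and the names `QUOTES_K_USD` and `spread_stats` are made up for this example.

```python
# Lowest and highest quotes per country in thousands of USD (from the table above).
QUOTES_K_USD = {
    "India": (16, 32),
    "Vietnam": (14, 26),
    "Philippines": (18, 30),
    "Poland": (35, 58),
    "Ukraine": (28, 45),
    "Romania": (26, 40),
    "Brazil": (22, 39),
    "Mexico": (24, 42),
    "Egypt": (12, 22),
    "South Africa": (20, 36),
    "Nigeria": (10, 18),
    "Indonesia": (14, 27),
}


def spread_stats(low, high):
    """Return (midpoint, high-to-low ratio) for one quote range."""
    return (low + high) / 2, high / low


# One row per country: (name, midpoint, ratio), widest relative spread first.
rows = sorted(
    ((country, *spread_stats(low, high)) for country, (low, high) in QUOTES_K_USD.items()),
    key=lambda row: row[2],
    reverse=True,
)

for country, midpoint, ratio in rows:
    print(f"{country:<13} midpoint ${midpoint:.1f}K  high/low {ratio:.2f}x")
```

Sorted this way, India tops the list (its highest quote is exactly double its lowest) and Romania has the tightest range. A ratio only describes the quotes, though. It says nothing about what you get for them.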

Where the Real Signal Lived

When we parsed through all 36 proposals, some surprising patterns emerged:

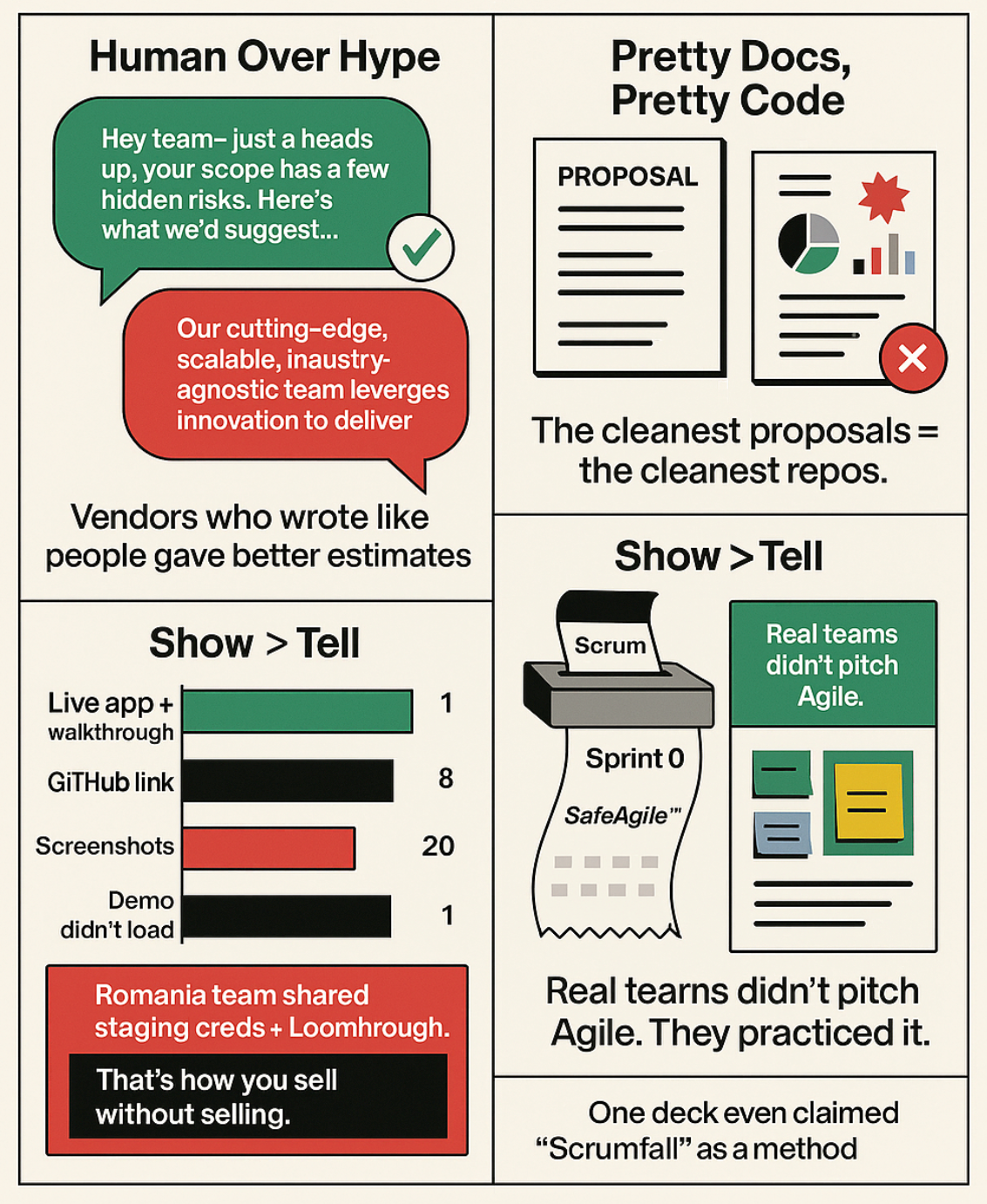

- Communication style was the biggest early predictor of quality. Teams who wrote like humans—not sales bots—almost always submitted the most thoughtful estimates. Their proposals flagged potential gotchas in our spec. They showed they’d built similar things. They didn’t bury us in jargon.

- Aesthetics mattered. No one hires a dev shop for their slide design… but the teams that submitted tight, clean, well-structured docs often wrote cleaner code. Coincidence? We don’t think so.

- The repo test was brutal. Of the 36, only 9 shared actual GitHub links. Most sent screenshots. A few offered password-protected demos that didn’t load. One team in Romania stood out by sharing a live app, staging credentials, and a walkthrough Loom. That’s how you sell without selling.

- The “agile” deck arms race is real. Half the vendors included some kind of Agile infographic. Most were copy-pasted. One even cited “scrumfall” as their preferred model. The best teams? Just showed us what their sprint process looks like today—no buzzwords.

Three Conversations That Stuck With Us

- Mexico

One PM replied with: “Just so we’re clear: are you open to async daily updates via Loom or do you want live standups?” We hadn’t even asked. That’s cultural alignment in one sentence.

- Vietnam

A lead engineer responded to our RFP with a 5-line breakdown of our tech stack risks and asked if we’d considered SQLite for mobile sync. Thoughtful, humble, and sharp.

- South Africa

Their estimate was 30% higher than others. But they gave us a mini case study of a similar build, showed us before/after screenshots, and explained how they’d think about reducing downtime during deployment. High price, but high clarity.

The Myth of the “Best Country to Outsource To”

If you’re hoping we’ll crown a winner—sorry. The truth is way messier.

India had the widest relative price spread (its highest quote was double its lowest) and the widest spectrum of quality. Poland delivered polished proposals, but not everyone needs (or can afford) $50/hr rates. Nigeria’s pricing was tempting, but response quality varied heavily. Vietnam surprised us: tech-forward, responsive, and underpriced for what we got.

So instead of a leaderboard, here’s a mindset shift:

Stop comparing countries. Start evaluating companies.

The country is just the setting. You’re still hiring a cast.

Five Filters That Matter More Than Hourly Rate

Want to skip the roulette table next time? Use these filters:

- Proof of build, not just pitch. Ask for real work samples. Not decks, not mockups. GitHub, staging links, Figma flows.

- How they question your brief is the test. Did they ask about edge cases? Scope creep? Deployment pipelines? Silence = red flag.

- Who’s writing the emails? If you're already three layers deep in sales before a dev speaks, walk away.

- Timezone ≠ communication friction. We had better clarity with teams 10 hours apart than with some 2 hours off.

- Their past clients say more than their website. Ask for references. Bonus: request a project that went sideways and how they handled it.

Rate ≠ Value

Choosing an offshore partner is less like shopping on Amazon and more like hiring a band. Sure, you can compare prices—but can they play in sync? Can they improvise? Will they show up to soundcheck?

Blind rate cards don’t tell you who’ll still be around when your product pivots.

We’ll publish deeper dives on each market in the coming weeks. If you’re caught between a $20K quote and a $42K one, and your gut’s undecided—drop us a note. We’ve done the homework, and we’re happy to walk you through the signal behind the slides.

Need an unfair advantage for your next build? Our offshore squads at 1985 are battle-tested and spreadsheet-verified. Let’s talk.

FAQ

1. Why did you choose these 12 countries for the tender experiment?

We picked countries that repeatedly show up in US/EU startup RFPs across platforms like Clutch, Upwork, and referrals. The goal was to reflect the real-world shortlist of a tech leader scouting offshore talent—balancing traditional players (India, Poland) with rising ecosystems (Vietnam, Nigeria) and “wild cards” that often get overlooked (Egypt, South Africa).

2. Did all vendors get exactly the same project brief?

Yes, down to the file name. We used a cloned Notion spec, structured Figma designs, and even embedded a Google Doc Q&A thread with fixed questions. We ensured there was zero room for misunderstanding due to format or missing context—so the comparison could focus on what mattered: how teams think and respond.

3. How did you evaluate proposals beyond pricing?

We tracked multiple signals: communication tone, technical clarity, risk identification, responsiveness, supporting artifacts (GitHub links, Loom walkthroughs), and even time-to-first-reply. We gave more weight to teams that asked smart questions or flagged assumptions early—because that often predicts lower downstream surprises.
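To make that kind of evaluation repeatable, one option is a simple weighted scorecard. The sketch below is an assumption-heavy illustration: the signal names come from the list above, but the weights, the 0 to 5 rating scale, and the `score_proposal` helper are hypothetical and were not the scoring used in this experiment.

```python
# Hypothetical weights: the signals are the ones listed above, the numbers are
# illustrative only. Risk identification is weighted highest to reflect the
# extra credit given to teams that flagged assumptions early.
WEIGHTS = {
    "communication_tone": 0.15,
    "technical_clarity": 0.20,
    "risk_identification": 0.25,
    "responsiveness": 0.10,
    "supporting_artifacts": 0.20,
    "time_to_first_reply": 0.10,
}


def score_proposal(ratings):
    """Combine 0-5 ratings into one weighted score; missing signals count as 0."""
    for name, value in ratings.items():
        if name not in WEIGHTS:
            raise KeyError(f"unknown signal: {name}")
        if not 0 <= value <= 5:
            raise ValueError(f"rating for {name} must be between 0 and 5")
    return sum(weight * ratings.get(name, 0) for name, weight in WEIGHTS.items())


# Example: a vendor that is strong on risk-flagging but sent no artifacts.
example = {
    "communication_tone": 4,
    "technical_clarity": 4,
    "risk_identification": 5,
    "responsiveness": 3,
    "supporting_artifacts": 0,
    "time_to_first_reply": 3,
}
print(f"score: {score_proposal(example):.2f} out of 5")
```

The useful part is less the final number and more the discipline: every proposal gets rated on the same signals before anyone looks at the price.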

4. Were lower-cost countries always lower quality?

Not even close. Vietnam and Indonesia had standout entries despite pricing 40–60% below Eastern Europe. Meanwhile, one of the most expensive Polish firms sent us a recycled boilerplate pitch. What mattered more was the team’s working maturity, not the GDP of their country.
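For a rough sanity check on that discount, here is a small Python sketch built on the quote ranges from the results table. It assumes the midpoint of each country’s range is a fair stand-in for a “typical” quote and treats Poland, Ukraine, and Romania as the Eastern Europe baseline. Both are simplifications made for this example, and the helper names are hypothetical.

```python
# Quote ranges in thousands of USD, taken from the results table in this article.
EASTERN_EUROPE = {"Poland": (35, 58), "Ukraine": (28, 45), "Romania": (26, 40)}
CHALLENGERS = {"Vietnam": (14, 26), "Indonesia": (14, 27)}


def midpoint(quote_range):
    """Midpoint of a (low, high) quote range, used as a proxy for a typical quote."""
    low, high = quote_range
    return (low + high) / 2


# Baseline: average of the three Eastern European midpoints.
ee_baseline = sum(midpoint(r) for r in EASTERN_EUROPE.values()) / len(EASTERN_EUROPE)


def discount_vs_baseline(quote_range, baseline=ee_baseline):
    """Fraction by which a country's midpoint sits below the baseline."""
    return 1 - midpoint(quote_range) / baseline


for country, quote_range in CHALLENGERS.items():
    print(f"{country}: {discount_vs_baseline(quote_range):.0%} below the Eastern Europe baseline")
```

Under these assumptions both countries land a little under 50% below the baseline, which sits inside the 40–60% band. Comparing low quote to low quote or high to high moves the exact figure around.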

5. Why did so many shops skip sending code samples?

There are a few reasons. Some firms simply don’t maintain public portfolios due to NDAs or weak DevRel practices. Others tried to hide behind slick pitch decks. But we also saw an uncomfortable pattern: teams that couldn’t produce a real repo often had vague estimates and overpromised timelines. Lack of code = lack of confidence.

6. What role did English fluency really play in team evaluation?

Fluency didn’t mean sounding like a California startup—it meant clarity in writing, thinking, and pushing back. We cared less about grammar and more about whether they could write a ticket, escalate blockers, and describe a trade-off. One Vietnamese engineer wrote simpler English than his peers—but asked the most thoughtful questions. That’s what stuck.

7. How reliable were the timeline estimates across the board?

Timelines varied from 10 to 15 weeks, but only half the vendors explained their reasoning. The best responses broke it down sprint-by-sprint or role-by-role. We flagged vague timelines ("approximately 12–14 weeks") as high-risk—especially when paired with optimistic pricing and thin technical detail.

8. Did time zone proximity translate to better collaboration readiness?

Surprisingly, no. Some LATAM teams assumed availability overlap would compensate for weaker async practices. Meanwhile, a Ukrainian team 10 hours off our fake HQ sent us a sample standup report, a GitHub activity snapshot, and a timezone coverage matrix. It’s not about overlap—it’s about planning and rituals.

9. How did sales vs. engineering balance show up in the proposals?

When sales wrote the whole thing, it showed—fluff, buzzwords, and no grounding in delivery details. The most compelling proposals were usually co-authored or reviewed by an engineering lead, with real tactical insight. One team from Mexico literally signed off with both their PM and tech architect. That kind of shared authorship mattered.

10. Should I use this data to choose a country for offshoring?

Use it to ask better questions, not make blanket calls. Great dev teams exist everywhere, but the way they communicate, estimate, and challenge your brief separates the real partners from the order-takers. This study won’t tell you which country is “best,” but it will help you sniff out the signals that matter—regardless of geography.