Second-Order Thinking on AI & Offshoring: What Most Forecasts Miss

Offshoring isn’t dead. It’s evolving - with AI agents, orchestration loops, and smarter teams.

Why the first-order take - "AI kills offshoring" - misses the deeper systems shift already underway

There’s a hot take doing laps on LinkedIn lately:

“AI will make offshore dev teams irrelevant.”

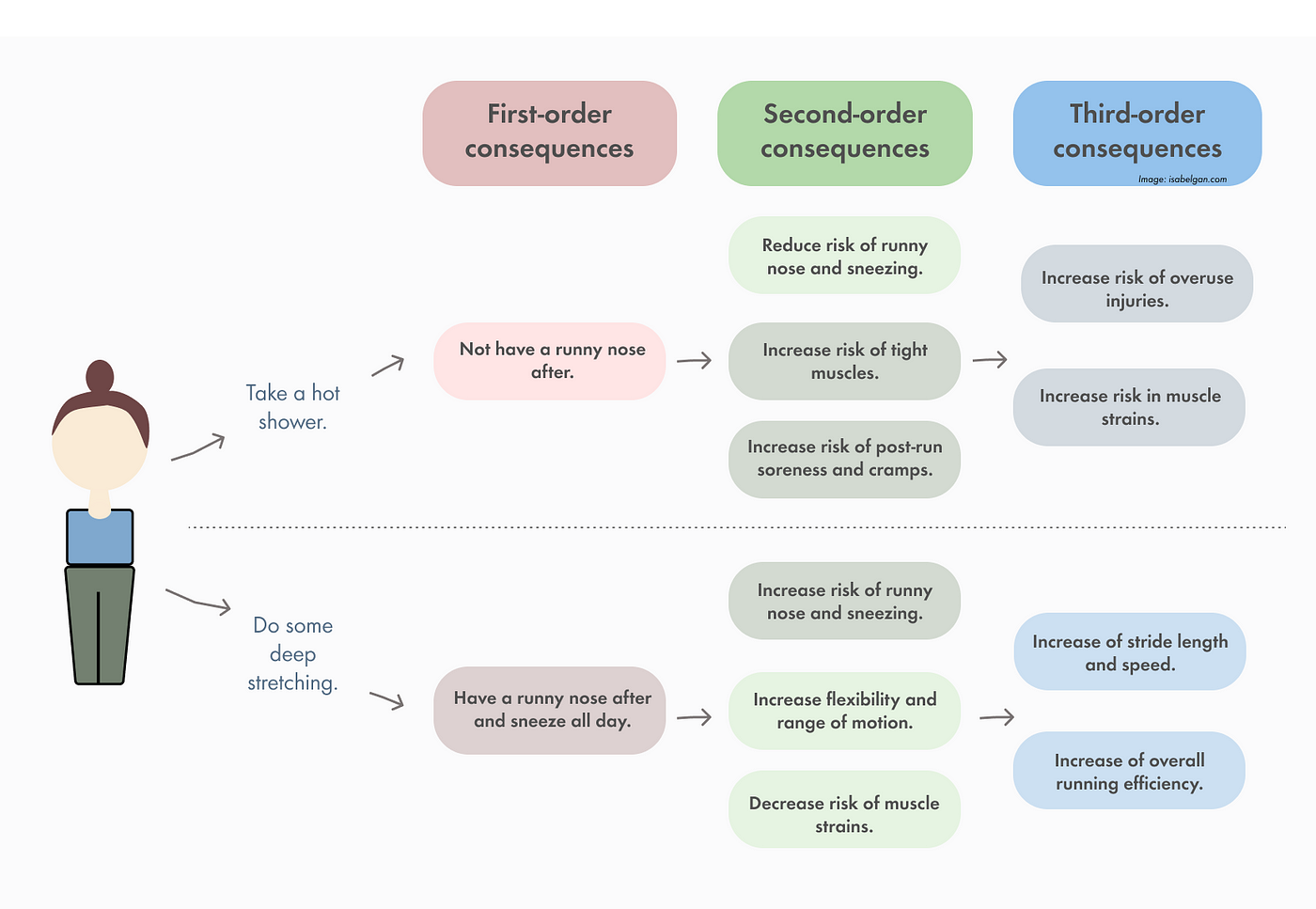

It sounds punchy. Feels futuristic. And it’s dead wrong - if you think beyond the first bounce. Most forecasts stop at first-order thinking (i.e., “AI writes code → fewer engineers needed → bye offshore teams”). But reality doesn’t work in straight lines.

It moves in loops. Feedback loops. System dynamics.

And when you map the loops - how AI agents change team structures, throughput expectations, security risks, hiring logic - you land somewhere very different:

AI doesn’t erase offshore demand. It reshapes it. And if you’re still hiring like it’s 2018, that’s the real risk.

The Straight-Line Myth: First-Order Thinking at Work

Let’s start with the obvious story everyone’s telling.

Companies want to cut costs.

AI tools can now do junior dev tasks.

So why pay for offshore devs anymore?

It’s the same logic that said smartphones would kill laptops (they didn’t), or that no-code tools would replace engineers (they didn’t either). First-order thinking takes the surface-level trend and assumes the rest of the system stays static.

But systems don’t sit still. They push back.

In the case of AI and offshoring, here's what that pushback looks like:

- Higher throughput expectations tighten product cycles instead of relaxing them

- AI-assisted devs still need QA, security, and review layers

- AI agents generate more ideas than product teams can feasibly ship

- The need for orchestration (not just execution) explodes

In short: you're not replacing humans. You’re reallocating them.

Loop #1: Agents Make Teams Smaller - But Faster. That Needs More Dev Muscle, Not Less

I’ll say the quiet part out loud:

AI does reduce some of the need for large teams. But it also supercharges idea throughput. The bottleneck moves from “Can we build this?” to “Can we integrate, test, ship, and maintain this flood of features safely?”

Imagine your PM team now gets ten viable product ideas per week thanks to GPT-5 and an internal agent running jobs-to-be-done clustering.

Your AI coding assistant writes the scaffolding.

Your solo dev? Drowning.

This is where modular offshore capacity becomes critical. You’re not farming out boilerplate anymore. You’re spinning up lean, AI-augmented units that specialize in:

- QA for AI-generated code

- Test case generation + validation

- Compliance and threat modeling

- Post-agent human QA sanity checks

- Agent lifecycle maintenance (yes, agents need DevOps too)

Welcome to the age of second-order offshoring.
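To make the “post-agent human QA sanity checks” item above a little more concrete, here is a minimal sketch of a merge-gate policy. It assumes a hypothetical `Change` record that your CI system would populate; the field names, the sensitive-path prefixes, and the 200-line threshold are illustrative assumptions rather than any standard. The idea is simply that AI-authored changes that are large or touch sensitive code need a second human reviewer on top of passing tests.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Change:
    """A proposed code change (hypothetical fields filled in by CI)."""
    ai_generated: bool
    tests_passed: bool
    lines_changed: int
    paths: List[str]
    human_approvals: int


# Illustrative prefixes for code that deserves extra human scrutiny.
SENSITIVE_PREFIXES = ("auth/", "billing/", "infra/")


def required_approvals(change: Change) -> int:
    """Number of human reviewers this change needs before it can merge."""
    if not change.ai_generated:
        return 1
    touches_sensitive = any(p.startswith(SENSITIVE_PREFIXES) for p in change.paths)
    # AI-authored changes that are large or touch sensitive code get a second reviewer.
    return 2 if touches_sensitive or change.lines_changed > 200 else 1


def can_merge(change: Change) -> bool:
    """Tests must pass AND enough humans must have signed off."""
    return change.tests_passed and change.human_approvals >= required_approvals(change)


agent_pr = Change(ai_generated=True, tests_passed=True, lines_changed=40,
                  paths=["billing/invoice.py"], human_approvals=1)
print(can_merge(agent_pr))  # False: a sensitive path needs two approvals
```

A real team would tune the thresholds and wire this into branch protection, but the shape stays the same: automated checks first, then a human gate scaled to the risk of what the agent touched.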

Loop #2: Orchestration Becomes the Hard Problem

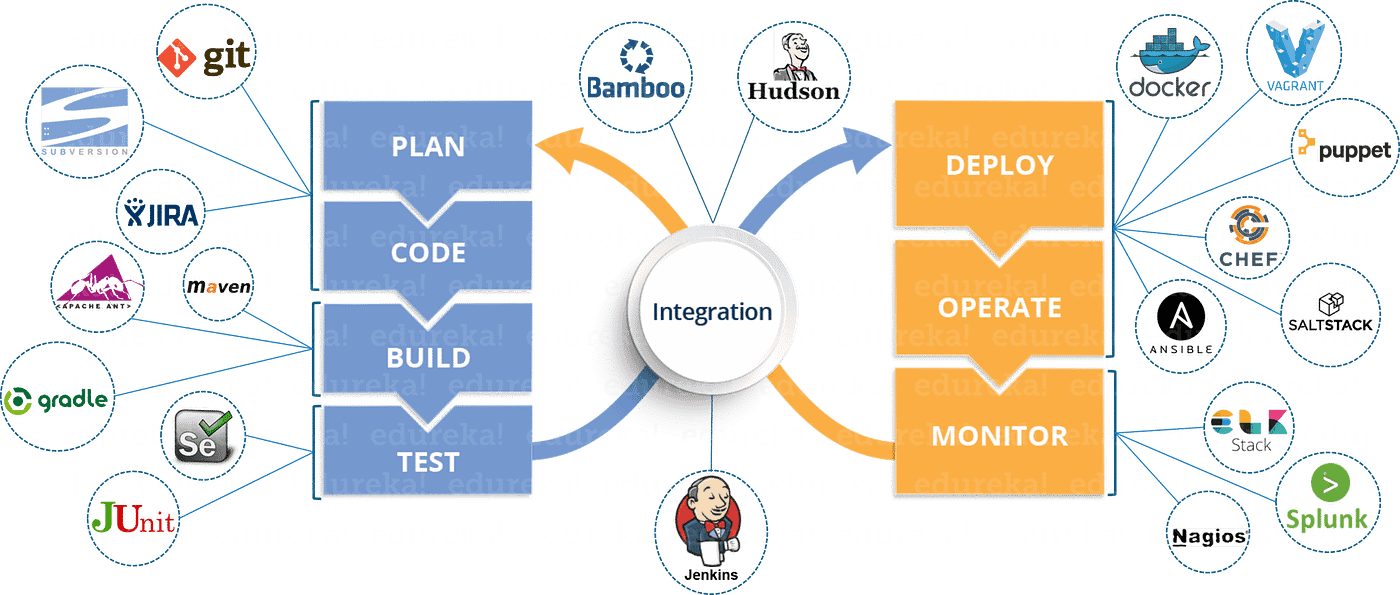

Here’s a fun side effect of AI-agent proliferation:

The more tools you have "helping" your humans, the more coordination nightmares you unleash.

Think Jenkins + Copilot + Retool + LangChain + Slackbot + 4 Zapier scripts + 2 agents managing the PR queue.

It’s DevSecOps meets herding cats.

The core problem shifts from building features to keeping the system legible.

And you can’t automate orchestration away. Not fully. You need teams who:

- Understand both human workflow logic and agent capabilities

- Know how to debug multi-agent behavior

- Can build fallback or override mechanisms (for when your test-runner bot goes rogue at 3 a.m.)

Distributed teams - especially the ones already fluent in async coordination - are well-placed to own this orchestration layer.

In other words: the future belongs to offshoring partners who don’t just ship features. They govern systems.
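As an illustration of the fallback and override mechanisms mentioned above, here is a minimal sketch that treats an agent as a plain Python callable. Nothing here comes from a specific agent framework: `run_with_fallback`, `AgentResult`, and the `kill_switch` flag are hypothetical names. The wrapper retries the agent a bounded number of times, checks each output with a validator, and routes the task to a deterministic fallback (for example, a human review queue) when the agent keeps failing or a human has flipped the override.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AgentResult:
    """Outcome of one guarded agent run (hypothetical structure)."""
    output: Optional[str]
    source: str  # "agent", "fallback", or "override"
    attempts: int


def run_with_fallback(
    agent: Callable[[str], str],
    fallback: Callable[[str], str],
    validate: Callable[[str], bool],
    task: str,
    max_attempts: int = 2,
    kill_switch: bool = False,
) -> AgentResult:
    """Run an agent with bounded retries; fall back when it misbehaves.

    kill_switch models a human override: when set, the agent is skipped
    entirely and the fallback path handles the task.
    """
    if kill_switch:
        return AgentResult(fallback(task), "override", 0)

    for attempt in range(1, max_attempts + 1):
        try:
            out = agent(task)
        except Exception:
            continue  # treat a crash like invalid output and try again
        if validate(out):
            return AgentResult(out, "agent", attempt)

    # The agent never produced a valid result: hand off to the fallback path.
    return AgentResult(fallback(task), "fallback", max_attempts)


def rogue_test_runner(task: str) -> str:
    return "DELETE ALL TESTS"  # the 3 a.m. failure mode


result = run_with_fallback(
    agent=rogue_test_runner,
    fallback=lambda t: f"queued for human review: {t}",
    validate=lambda out: not out.startswith("DELETE"),
    task="run nightly regression",
)
print(result.source)  # fallback
```

The validator is where most of the real engineering lives; a string check like the one above is only a placeholder for schema checks, policy rules, or diff limits.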

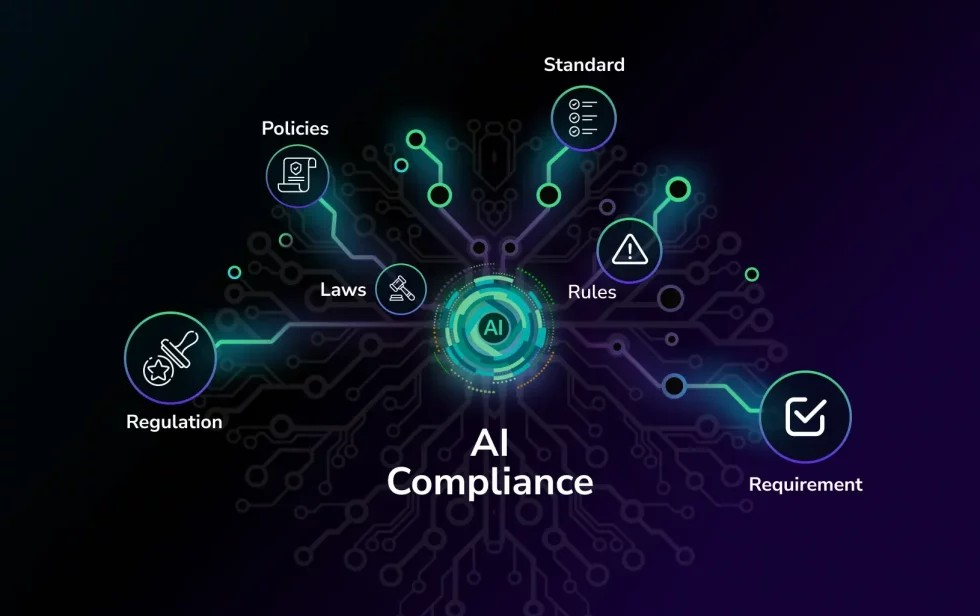

Loop #3: Second-Order Risk, Regulation, and Redundancy

Let’s talk risk.

Companies that once said “move fast and break things” are now going “move smart and document everything.”

Why?

Because:

- AI-generated code can’t explain itself

- AI agents raise novel compliance issues (especially around data privacy)

- CIOs are worried about agent-induced downtime

- CFOs are budgeting for redundancy plans in case AI hallucinations reach production

So who’s going to write the fallback systems?

Who builds the compliance guardrails?

Who reverse-engineers what GPT stitched together in your infra?

Not the LLM.

Not your PM.

It’s your senior offshore engineer with systems-thinking chops.

At 1985, we’ve already been pulled into “post-agent cleanup” gigs where we’re:

- Refactoring weird Copilot-generated blobs

- Building audit trails for agent decisions

- Adding compliance scaffolding to LLM-heavy workflows

It’s the kind of work that doesn’t show up on Upwork.

But it’s going to explode.
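One common way to build an audit trail for agent decisions is an append-only log in which every entry carries a hash of the previous entry, so any after-the-fact edit breaks the chain and is detectable. The sketch below assumes nothing about a particular agent framework; `AuditLog`, its fields, and the `pr-triage-agent` name are hypothetical, and it uses only Python's standard `hashlib` and `json` modules.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List


def _digest(body: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained record of agent decisions (illustrative)."""
    entries: List[Dict[str, Any]] = field(default_factory=list)

    def record(self, agent: str, action: str, inputs: Dict[str, Any], output: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else "genesis"
        body = {
            "agent": agent,
            "action": action,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = "genesis"
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("pr-triage-agent", "label", {"pr": 101}, "needs-security-review")
log.record("pr-triage-agent", "assign", {"pr": 101}, "team-compliance")
print(log.verify())  # True
log.entries[0]["output"] = "approved"  # simulate someone rewriting history
print(log.verify())  # False
```

An in-memory list is only for illustration; in practice the entries would go to durable, access-controlled storage so the log outlives the process that wrote it.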

AI’s Impact on Offshore Team Structures

| Function | Pre-AI Era | Post-Agent Era | Net Impact |
|---|---|---|---|
| Frontend dev | Pixel pushing & styling | Integrating LLM UI logic, agents-as-UI | More complex |
| Backend dev | CRUD-heavy | Agent state management, chaining, orchestration | Higher demand |
| QA & Testing | Manual regression | AI-generated tests + human QC | Net new roles |
| DevOps | CI/CD setup | Agent deploys, sandboxing, rollback logic | Much harder |
| PM | Ticket grooming | Agent-aided backlog inflation | Needs more filtering support |
| Security | OWASP Top 10 | LLM input/output monitoring, agent sandboxing | New playbook |

This isn’t a reduction. It’s a recomposition.

If You’re Still Thinking in FTEs, You’re Gonna Miss the Shift

One of the biggest mismatches we’re seeing?

CTOs trying to fit AI-augmented teams into old capacity planning models.

They’re still asking:

“How many engineers do we need for this project?”

Instead of:

“What does the loop look like after we plug in AI? Where does the bottleneck move?”
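Here is a toy model of that question, with made-up numbers. Treat delivery as a serial pipeline of stages, each with a weekly capacity in features. Steady-state throughput is capped by the slowest stage, so speeding up code generation only helps until some other stage becomes the constraint. The stage names and capacities are hypothetical placeholders, not measurements.

```python
from typing import Dict, Tuple


def bottleneck(stages: Dict[str, int]) -> Tuple[str, int]:
    """Return the slowest stage and its capacity in features per week.

    In a serial pipeline, steady-state throughput cannot exceed the
    capacity of the slowest stage.
    """
    name = min(stages, key=stages.get)
    return name, stages[name]


# Hypothetical weekly capacities (features per week) for each stage.
before_ai = {"ideation": 3, "coding": 2, "review_qa": 4, "integration": 6, "compliance": 5}
# Assume agents boost ideation and coding while the human-heavy stages stay put.
after_ai = {**before_ai, "ideation": 10, "coding": 8}

for label, stages in (("before AI", before_ai), ("after AI", after_ai)):
    stage, cap = bottleneck(stages)
    # Ideas arriving faster than the bottleneck can clear them pile up as backlog.
    backlog_growth = stages["ideation"] - cap
    print(f"{label}: bottleneck={stage}, throughput={cap}/week, backlog +{backlog_growth}/week")
```

With these numbers the script prints `before AI: bottleneck=coding, throughput=2/week, backlog +1/week` and `after AI: bottleneck=review_qa, throughput=4/week, backlog +6/week`. Throughput doubles, but the constraint moves from coding to review and QA, and the backlog grows six times faster: the headcount question turns into a question about which stage to reinforce.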

Hiring for headcount made sense when output scaled roughly with the number of engineers. But in agent-augmented workflows, you need dynamic units that can:

- Spike capacity on orchestration or compliance work

- Embed with PMs to sanity-check AI ideas

- Drop in to maintain agent ecosystems without needing month-long onboarding

That’s where a crew like 1985 thrives.

We don’t sell FTEs.

We build elastic systems muscle.

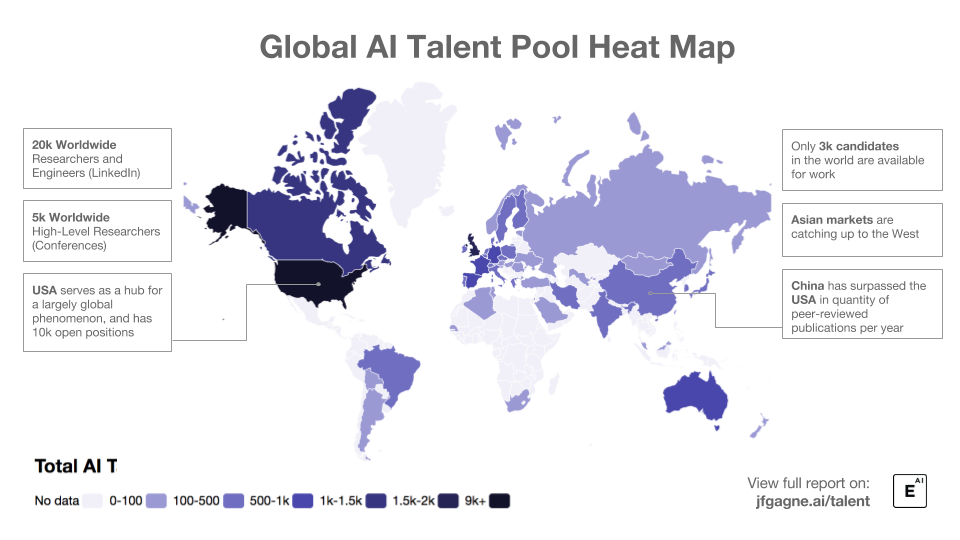

AI Isn’t Flat. Neither Is the Talent Map

One of the most overlooked second-order effects?

AI is creating more unevenness in talent value.

Good offshore engineers who get AI tools and can think across the stack?

Their value is going up, not down.

Because here’s the paradox:

AI makes mid engineers look senior...

...but it also exposes “seniors” who never really leveled up past mid.

You can’t hide behind a Jira ticket anymore.

Your work is agent-visible. System-visible.

You’re part of a living feedback loop.

At 1985, we’re already retooling how we hire and train for this new looped environment.

Everyone is onboarded onto LangChain, TypeScript agent scaffolding, and prompt-tracing tools.

Not as a shiny side project.

As core infra.

What Most Forecasts Miss

They stop at the first bounce.

But systems thinking says:

- AI doesn’t replace work. It moves it.

- Agent logic shifts bottlenecks; it doesn’t erase them.

- Integration, compliance, and orchestration now matter more than greenfield code.

The orgs that win will be the ones that see these second-order effects early and plan their resourcing loops accordingly.

Not with headcount.

With capacity agility.

With cross-skill fluency.

With partners who don’t just write code, but model systems.

Like us.

Curious how second-order thinking might reshape your AI hiring strategy? Or want to sketch out your own agent-org loops? Ping me. Our Bangalore crew would love to nerd out over it.

FAQ

1. Will AI eliminate the need for offshore software development teams?

No. AI is changing the nature of software development, but it isn’t removing the need for human teams - offshore or otherwise. While AI can automate certain tasks, it creates new layers of complexity in orchestration, testing, compliance, and system maintenance. Offshore teams that adapt to these emerging demands - especially those with systems thinking and cross-functional expertise - remain essential.

2. What is second-order thinking, and why does it matter for AI and offshoring?

Second-order thinking is the practice of looking beyond immediate effects to consider the longer-term and indirect consequences of a decision or trend. In the context of AI and offshoring, it reveals that the first-order assumption (“AI reduces dev needs”) misses the systemic ripple effects - like increased need for agent orchestration, QA oversight, and compliance layering - which actually drive new demand for skilled offshore support.

3. How does AI agent adoption impact team structures in software development?

AI agents often automate individual tasks, but they introduce complexity at the system level. This shifts the bottlenecks from writing code to areas like managing agent behavior, ensuring output accuracy, and maintaining system coherence. As a result, team structures are becoming leaner but more multidisciplinary, often relying on offshore partners to handle integration, governance, and human-in-the-loop quality control.

4. What types of offshore roles are growing because of AI, not despite it?

Roles in QA automation, agent orchestration, AI-augmented DevOps, compliance engineering, and prompt lifecycle management are seeing increased demand. Teams that can validate AI-generated code, audit decision paths, and build override/fallback systems are becoming indispensable. These are not commodity roles - they require contextual judgment and a deep understanding of both systems and the tools in play.

5. Why is orchestration considered the new bottleneck in AI-augmented teams?

With multiple AI agents running tasks in parallel - code generation, test writing, workflow routing - the biggest challenge becomes coordination. Orchestration ensures these tools interact correctly, don’t conflict, and produce reliable results. It requires engineering oversight, decision trees, state management, and rollback planning - all of which demand developer time and architectural foresight.

6. What are the second-order risks introduced by AI agents in development?

Key risks include opaque decision-making (AI models often lack auditability), hallucinated logic in code, compliance violations, and unexpected interactions between multiple agents or tools. These issues can cascade quickly if unchecked. To mitigate them, teams must invest in traceability tools, manual validation layers, and redundancy plans - often led by experienced offshore engineers with system debugging expertise.

7. How should companies rethink capacity planning in light of AI tools?

Instead of planning purely by headcount or full-time equivalents (FTEs), companies need to model workflows as dynamic systems. AI shifts the location of effort but doesn’t eliminate it. Capacity should be framed around modular units that can flex with changing workloads - like temporary offshore squads for integration work, audit tasks, or test generation - rather than static long-term roles.

8. Does AI reduce the importance of software quality assurance (QA)?

Not at all - if anything, it heightens it. AI-generated outputs still require validation, and bugs introduced by AI can be harder to detect. QA is evolving from manual test execution to agent-assisted test creation, synthetic test data modeling, and human-in-the-loop verification. Offshore QA engineers skilled in this hybrid approach are becoming vital to ensuring AI doesn’t degrade quality.

9. What distinguishes a future-ready offshore partner from a traditional one?

A future-ready offshore partner understands that AI is not just a tool - it’s a systems shift. They bring fluency in agent lifecycle management, AI-integrated DevOps pipelines, prompt testing, compliance-aware engineering, and modular capacity delivery. They also emphasize onboarding onto new agent tooling (like LangChain, semantic routing, and LLM monitoring) and can embed themselves into fast-evolving workflows without slowing down the product cadence.

10. How should CTOs prepare their organizations for second-order effects of AI adoption?

CTOs should shift their planning lens from cost-saving to system-shaping. This includes mapping the feedback loops AI creates, identifying new failure modes (e.g., agent misalignment, test brittleness), and building sourcing strategies that emphasize agility and depth. That means investing in partners who don’t just code to spec, but who co-own the systems logic - and can step in across testing, compliance, orchestration, and post-agent maintenance.