Top Programming Languages for AI in 2025: Strengths, Ecosystems & Use Cases

The AI stack got crowded. We break down who’s good at what in today’s coding league.

No, Python didn’t retire - but the AI party got a lot more crowded

Remember when AI meant Python or bust? Cute. In 2025, we’re past the “just use TensorFlow” stage and deep into a multilingual, multi-paradigm arms race. Each programming language now brings a unique superpower to AI development - whether you're fine-tuning a 13B model or shipping an LLM agent that writes Jira tickets (and maybe closes them, if you’re lucky).

The hype still flows like Red Bull at a hackathon, but beneath it, real patterns are emerging. So let’s cut through the slide decks and get tactical: what languages are actually delivering in AI right now - and where?

Python Is Still the GOAT (But It’s Not a Monopoly Anymore)

If you’re building AI in 2025, chances are your stack still has a Python heartbeat. It's the lingua franca of AI for a reason:

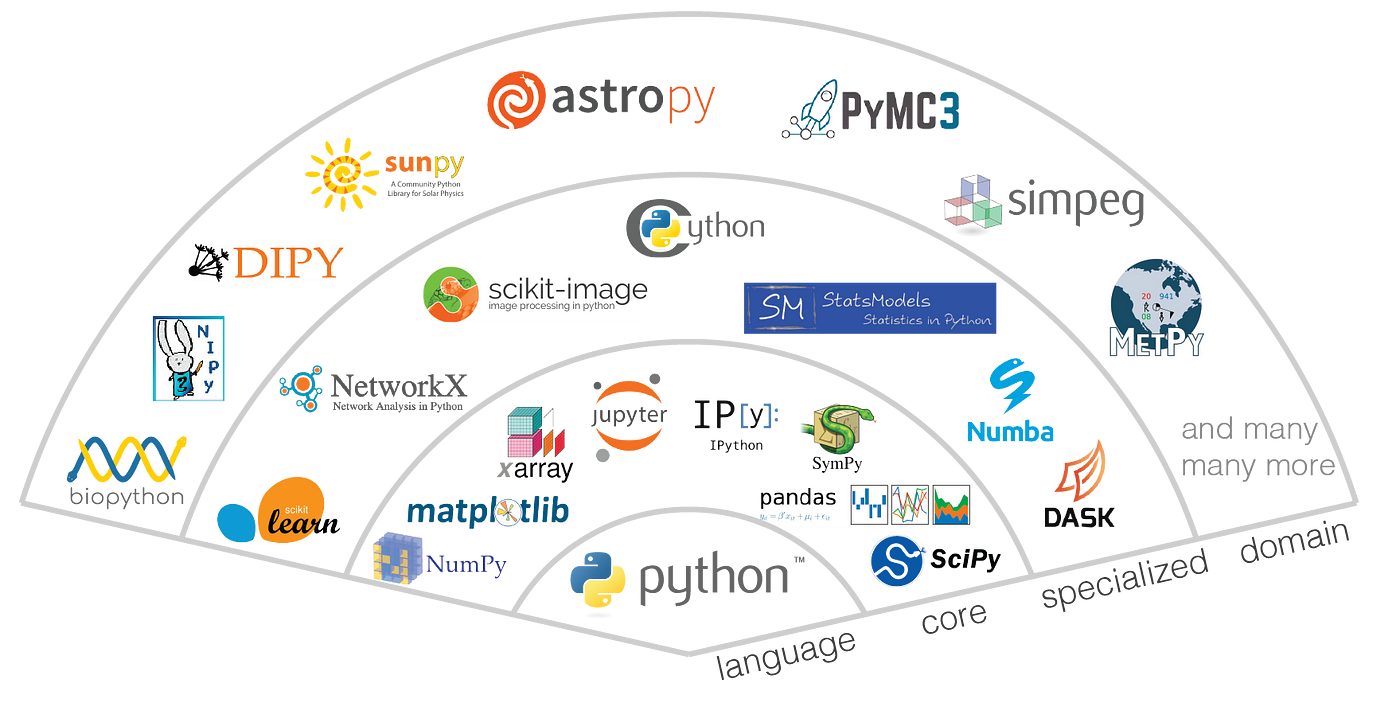

- Ecosystem gravity: PyTorch, TensorFlow, Hugging Face Transformers, scikit-learn… they all speak Python fluently.

- Data ops: Pandas, NumPy, Matplotlib are still table stakes.

- AI agents: LangChain, Haystack, AutoGen - the rapid prototyping layer is deeply Pythonic.

But here’s the twist: Python’s GIL (Global Interpreter Lock) is still a party pooper for CPU-bound multithreading, and the optional free-threaded build introduced in Python 3.13 is not yet the default. Python isn’t exactly loved in latency-critical environments either. So while it’s unbeatable for R&D and glue logic, it’s losing ground in production inference and systems-level orchestration.

Reality check: Python’s ceiling isn’t its syntax - it’s where performance and packaging matter. That’s where other languages sneak in.
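Want to see the GIL bite? Here is a minimal sketch, standard library only, that runs the same CPU-bound job on a thread pool and on a process pool. The names `count_primes` and `run_with` are made up for illustration, and the timings depend on your machine and Python build, so treat the output as a rough signal rather than a benchmark.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """Deliberately CPU-bound: naive count of primes below `limit`."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_with(executor_cls, jobs):
    """Run every job on the given executor; return (results, wall seconds)."""
    start = time.perf_counter()
    with executor_cls(max_workers=4) as pool:
        results = list(pool.map(count_primes, jobs))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    jobs = [50_000] * 4  # four identical CPU-bound tasks
    thread_results, thread_secs = run_with(ThreadPoolExecutor, jobs)
    process_results, process_secs = run_with(ProcessPoolExecutor, jobs)
    assert thread_results == process_results  # same answers either way
    print(f"threads:   {thread_secs:.2f}s")
    print(f"processes: {process_secs:.2f}s")
```

On a standard (GIL-enabled) CPython build, the threaded run typically takes about as long as doing the jobs one after another, while the process pool can spread them across cores. That gap is the concurrency ceiling in a nutshell.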

C++ and Rust: The Performance Backbone

Let’s talk speed demons.

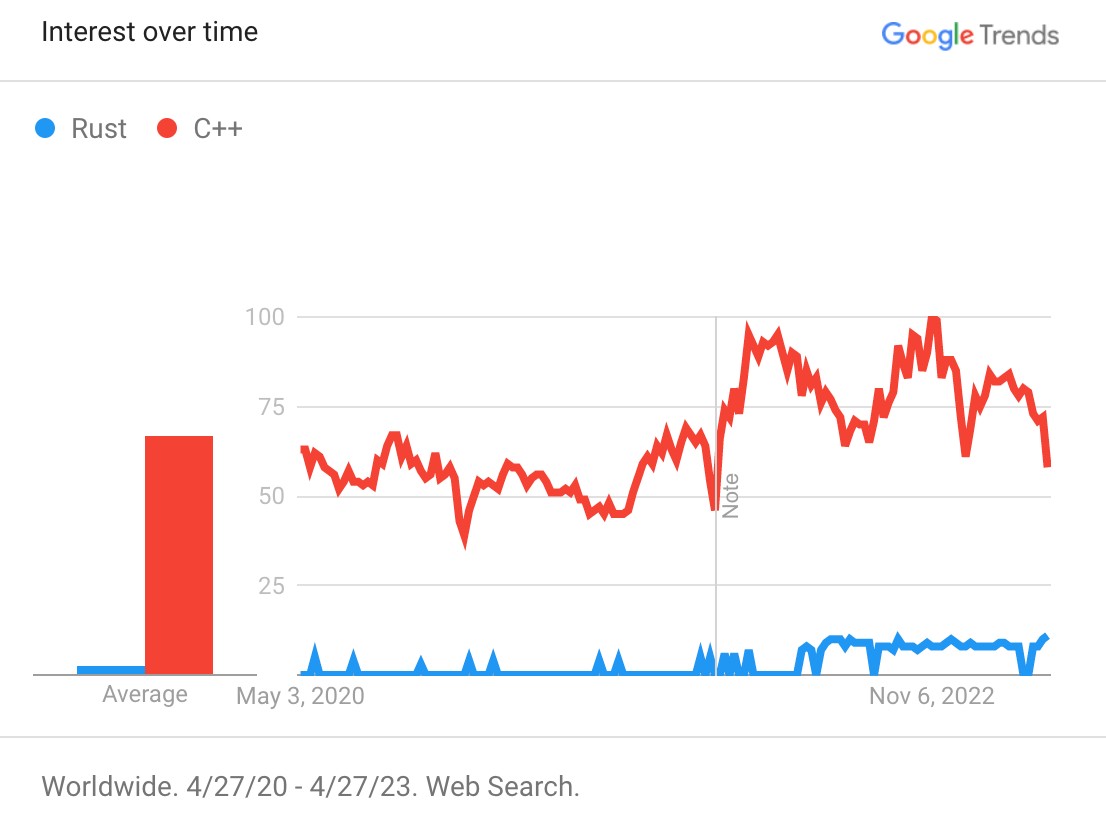

C++ continues to be the bedrock for ML frameworks. PyTorch’s core is in C++. Most GPU kernels? CUDA/C++. If your AI workload needs to fly - e.g., real-time vision for autonomous drones - C++ still gets the nod.

Rust, on the other hand, is C++ without the footguns. In 2025, it’s the darling of systems engineers building AI infrastructure:

- Safety and concurrency: Rust’s ownership model makes memory bugs almost cute in hindsight.

- Model deployment: Libraries like tract, tangram, and the increasingly capable burn framework are helping Rust punch above its weight in inference-heavy environments.

Rust isn’t replacing Python. But when you need to serve a quantized model in under 10ms with minimal RAM on edge hardware? Rust’s your friend.

Verdict: Python builds it. Rust ships it.
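Whatever language ends up serving the model, a 10ms budget only counts once you measure it. Below is a rough sketch of a latency check, written in Python for brevity. The helper `latency_percentiles_ms` and the dummy workload are hypothetical stand-ins, not part of any library named above; swap in a call to your real inference function.

```python
import statistics
import time
from typing import Callable


def latency_percentiles_ms(call: Callable[[], object], runs: int = 200, warmup: int = 20) -> dict:
    """Time `call` repeatedly and report p50 / p99 / max latency in milliseconds."""
    for _ in range(warmup):  # let caches and lazy initialisation settle first
        call()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    # n=100 yields 99 cut points; "inclusive" interpolates within the observed range
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50": cuts[49], "p99": cuts[98], "max": max(samples)}


if __name__ == "__main__":
    BUDGET_MS = 10.0  # the budget quoted in the text

    def fake_inference():
        # Placeholder workload standing in for a real model call
        return sum(i * i for i in range(10_000))

    stats = latency_percentiles_ms(fake_inference)
    verdict = "within" if stats["p99"] <= BUDGET_MS else "over"
    print(f"p50={stats['p50']:.3f}ms p99={stats['p99']:.3f}ms ({verdict} budget)")
```

Checking the tail (p99) rather than the average is the design choice that matters here: a service that averages 4ms but spikes to 40ms is not a sub-10ms service.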

JavaScript (and TypeScript): The AI Frontend Awakens

Yes, you read that right.

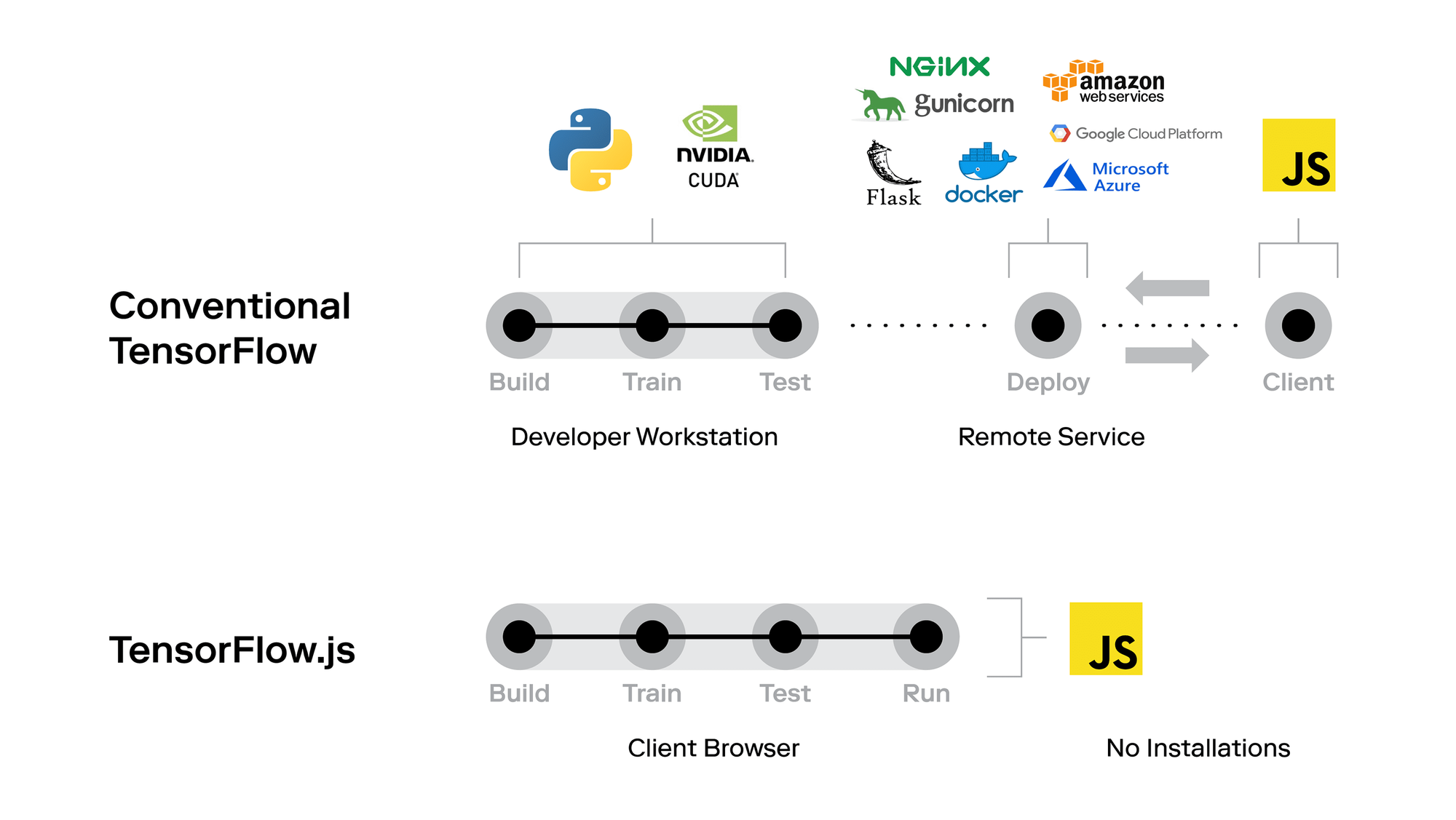

While backend AI gets the glory, JavaScript and TypeScript are quietly powering AI’s frontend revolution:

- On-device inferencing: TensorFlow.js and ONNX Runtime Web (the successor to ONNX.js) allow small models to run in-browser with zero server round trips.

- UI for agents: AI copilots need slick, responsive interfaces - and React (with TypeScript) still rules that world.

- DevX for SDKs: Building an AI product? Your TypeScript SDK will probably ship before your Python wrapper is production-grade.

Also: every LLM chatbot eventually becomes a web app. And guess what? That glue code ain’t Python.

Use cases: Agent dashboards, LLM playgrounds, educational tools, lightweight summarizers inside Chrome extensions.

Sneaky trend: Prompt engineers writing inference flows directly in TS, wrapping APIs like OpenRouter or Together.ai with custom logic and UI.

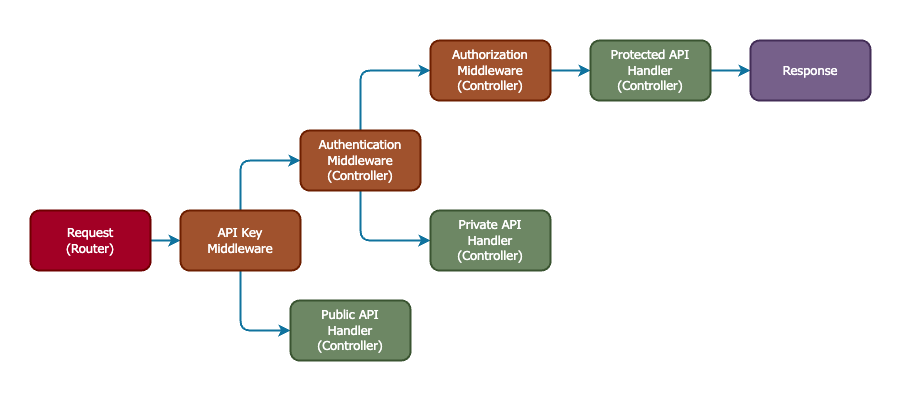

Go and Java: AI’s Middle Managers

They’re not sexy, but they scale.

Go is showing up in inference serving, MLOps infra, and backend orchestration, much of it riding on the Go-native Kubernetes ecosystem (KServe’s control plane, for example). Devs love it for its simplicity, speed, and Docker-friendliness.

Java is still a force in enterprise NLP and fraud detection, especially in finance and telco. Thanks to JVM-based libraries like DL4J and support in Apache Spark MLlib, Java remains relevant - just not trendy.

Where they shine:

- High-volume API endpoints for model inferencing

- Internal risk-scoring models

- Microservices that call AI models (rather than train them)

They won’t win hackathons, but your VP of Engineering trusts them with actual SLAs.

Julia: Math Crush That Never Turned Serious?

Every year someone says Julia is about to break out. And every year, it almost does.

Julia’s promise is still tantalizing: fast like C, easy like Python, math-native like MATLAB. In 2025, it’s matured nicely, with better package stability, multiple dispatch magic, and serious uptake in:

- Scientific computing

- Probabilistic programming (via Turing.jl)

- Differentiable physics and symbolic AI

But Julia remains niche. If you’re building a recommendation system or shipping to a cloud endpoint, Python still gets there faster.

Still - if you’re a quant, climate modeler, or doing symbolic regression at 3 a.m., Julia is your playground.

Mojo: The Dark Horse with Compiler Juice

If Python and C++ had a brilliant lovechild, it might look like Mojo.

Created by Modular, Mojo is:

- Python-compatible at surface level

- But compiled, statically typed, and insanely fast under the hood

In 2025, it’s still early days, but Mojo is already gaining traction for:

- Custom model kernels

- Edge ML workloads

- AI compiler pipelines

It’s not replacing Python yet - but if you’re hitting performance ceilings and don’t want to rewrite everything in Rust or C++, Mojo might be your golden path.

Think of it as: I love Python, but I wish it could deadlift more.

Swift: AI’s iOS Whisperer

Yes, Swift.

For mobile-native AI experiences - like on-device summarization, personalized keyboards, or fitness tracking via pose estimation - Swift + Core ML is still the go-to.

Apple’s AI push in 2025 (Vision Pro SDKs, on-device LLMs, etc.) means Swift is more relevant than ever for devs building Siri 3.0-style experiences.

Also, with MLX (Apple’s ML framework for Apple silicon, which ships Swift bindings), Swift is getting some real AI power, not just wrapper glory.

Who Wins Where?

Let’s recap this multilingual mosh pit with a quick scorecard:

| Language | Strengths | Best For |
|---|---|---|
| Python | Libraries, community, flexibility | R&D, agents, rapid prototyping |
| Rust | Safety, performance | Edge AI, inferencing, infra tooling |
| C++ | Speed, GPU-native | Model internals, real-time vision |
| TypeScript | UX, glue logic, SDKs | Frontend agents, in-browser AI |
| Go | Simplicity, concurrency | Inference serving, MLOps infra |
| Java | Enterprise-grade JVM ecosystem | Risk scoring, Spark pipelines |
| Julia | Math modeling, symbolic AI | Scientific AI, optimization problems |
| Mojo | Python-like, compiled performance | Kernel dev, edge deployment, compiler chains |
| Swift | Mobile, Apple’s AI stack | On-device apps, wearables, Vision Pro |

Ship / Skip in 2025

Ship It If:

- You’re in AI R&D → Python is still your daily driver.

- You need prod inference under 10ms → Rust or C++.

- You’re building LLM copilots with UI → TypeScript/React.

- You’re targeting iPhones or Vision Pro → Swift all day.

- You’re compiling custom model logic → Mojo deserves a look.

Skip It If:

- You’re tempted to use Julia for your startup’s backend.

- You think Go will magically replace PyTorch.

- You’re trying to do deep learning in PHP (just... why?)

Language Matters, But So Does the Stack

In 2025, AI development is less about picking one language and more about playing conductor to an orchestra:

- Python writes the logic

- Rust handles the edge

- JavaScript renders the UI

- Mojo or C++ turbocharges the performance-critical bits

We’ve moved past the “one ring to rule them all” era. Instead, AI products now speak in dialects: model code, infra code, glue code, SDKs. If your team speaks all of them? You’re dangerous.


FAQ

1. Why is Python still dominant in AI development despite performance limitations?

Python remains the go-to language for AI because of its unparalleled ecosystem of libraries (like PyTorch, TensorFlow, Hugging Face) and its ease of use for rapid experimentation. While not the fastest, Python excels in developer productivity and community support. Its limitations in performance are often mitigated by C++ or Rust extensions under the hood.
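A toy illustration of that "native code under the hood" point, assuming the standard CPython interpreter: the same dot product written as an interpreted loop and as a chain of built-ins (`sum`, `map`, `operator.mul`) whose inner loop runs in C. The function names are made up for this sketch, and the built-ins are only a small-scale stand-in for what libraries like PyTorch do with their compiled cores.

```python
import operator
import timeit


def dot_python_loop(a, b):
    """Dot product with the loop executed by the Python interpreter."""
    total = 0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_c_builtins(a, b):
    """Same result, but the iteration happens inside C-implemented built-ins."""
    return sum(map(operator.mul, a, b))


if __name__ == "__main__":
    a = list(range(100_000))
    b = list(range(100_000))
    assert dot_python_loop(a, b) == dot_c_builtins(a, b)
    # Absolute numbers vary by machine; only the relative gap is of interest
    loop_secs = timeit.timeit(lambda: dot_python_loop(a, b), number=20)
    builtin_secs = timeit.timeit(lambda: dot_c_builtins(a, b), number=20)
    print(f"python loop: {loop_secs:.3f}s, C built-ins: {builtin_secs:.3f}s")
```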

2. How does Rust compare to C++ for AI infrastructure?

Rust offers memory safety and concurrency benefits without sacrificing much performance, making it a safer alternative to C++ for AI infrastructure. While C++ is still essential for GPU-accelerated workloads (e.g., CUDA), Rust is increasingly favored for building model serving backends and edge inference systems where reliability and speed are both critical.

3. Can JavaScript or TypeScript really be used for AI applications?

Yes, JavaScript and especially TypeScript are becoming central to AI product development - particularly on the frontend. Libraries like TensorFlow.js and ONNX Runtime Web (the successor to ONNX.js) allow in-browser inferencing, while TypeScript enables robust SDKs and agent interfaces. It’s less about training models and more about deploying interactive, AI-powered user experiences.

4. What is Mojo and how is it different from Python or Rust?

Mojo is a newer language that combines Python-like syntax with systems-level performance. Developed by Modular, it compiles down to performant machine code and supports advanced features like static typing and SIMD. Mojo is gaining attention for tasks like custom kernel development and edge deployment, where Python falls short and Rust may be overkill.

5. When should developers consider using Julia for AI projects?

Julia is best suited for scientific AI applications, such as optimization problems, probabilistic modeling, or differential equations. Its syntax is math-friendly and its performance rivals C, but its ecosystem is narrower. For domain-specific AI work in academia or high-performance computing, Julia is a strong contender.

6. Is Java still relevant for machine learning in 2025?

Java continues to be used in enterprise environments where JVM infrastructure is entrenched. It’s commonly seen in fraud detection, risk scoring, and large-scale data pipelines via Apache Spark. While it’s not leading in deep learning innovation, it remains stable, scalable, and integration-friendly for production systems.

7. How do AI engineers use Go in modern ML pipelines?

Go is increasingly adopted for building fast, maintainable model-serving APIs and MLOps tooling. It’s ideal for inference endpoints, orchestration layers, and data transformation pipelines due to its simplicity, concurrency support, and fast compile times. It’s rarely used to train models but excels in getting them to production.

8. What role does Swift play in AI development, especially on mobile?

Swift, combined with Apple’s Core ML and the newer MLX framework, is crucial for building AI-native iOS applications. Developers use it to run models on-device, supporting privacy and performance use cases like health tracking, voice recognition, or computer vision for wearables and AR.

9. Which language is best for building LLM agents or copilots?

Python is still the primary choice for building LLM agents due to the maturity of tools like LangChain, AutoGen, and the OpenAI SDK. However, TypeScript is rapidly becoming the second language of AI agents, especially when UX, plugin interoperability, or browser-based execution is needed.

10. Will one programming language eventually dominate AI development?

Unlikely. AI in 2025 is a polyglot domain. Python dominates experimentation and agent logic, Rust and C++ power the infra and inference layers, TypeScript handles interaction layers, and languages like Mojo, Swift, and Go fill specialized niches. The best teams embrace a multi-language strategy optimized for context, not just popularity.