Why Your External Code Reviews Fail (And How to Fix Them Today)

Smart external reviews don’t happen by chance. Here’s the system we built that actually works.

CTOs love the idea. PMs nod at the checklist. But when it’s time to ship, those “extra eyes” often squint at the wrong things.

There’s this recurring fantasy in productland: you bolt on an external code reviewer - maybe a dev from another team, maybe a paid consultant - and like magic, bugs vanish, architecture shines, and your sprint velocity doesn’t even flinch.

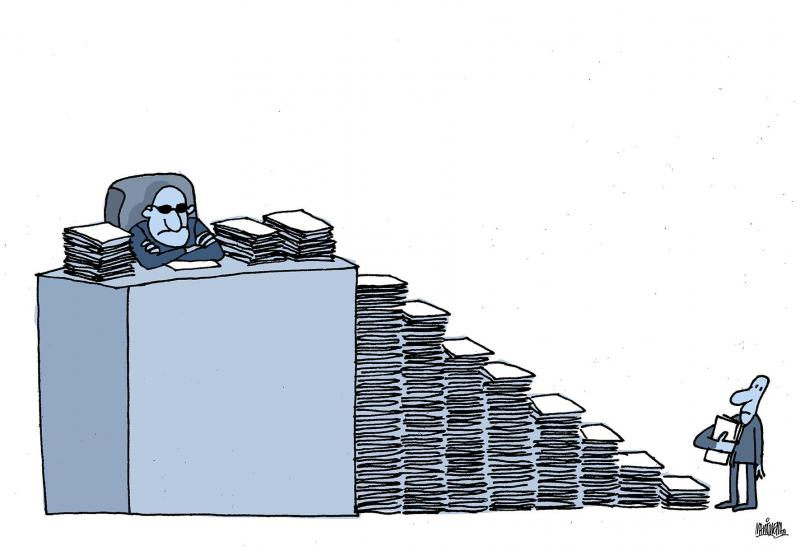

Except, in practice? External code reviews are like airport security. Lots of ceremony, very little actual catch rate. By the time feedback lands, the original dev has mentally moved on. Review comments either state the obvious or nitpick style. And the biggest architectural landmines? Still buried under a cheery “LGTM.”

We’ve run into this mess while building for fintechs, edtechs, and one particularly ambitious goat-delivery startup. The pattern repeats: good intentions, bad scaffolding. Here’s how we broke the cycle - and what you can steal.

The Drive-By Reviewer Problem

Let’s start with the obvious: external reviewers don’t live in your repo.

They’re not steeped in the why of your product. They don’t know your team's quirks, naming conventions, or which helper function you hacked together at 3 a.m. during a live-fire bug blitz. So what happens?

- They play it safe, sticking to obvious things like unused imports, missing null checks, and typos in comments.

- Or worse, they overcompensate, suggesting sweeping refactors or architecture changes based on a 400-line diff with zero context.

Neither is helpful.

We once had an external reviewer suggest converting a simple payment microservice to event-driven architecture. Sounds fancy - until you realize it handled exactly four operations and ran fine under load. Classic case of smart-but-detached.

So how do you fix this?

Context is king. And context doesn’t come from PR titles like feature/payment-refactor. It comes from structured onboarding, scoped reviews, and tools that surface decisions before the reviewer has to reverse-engineer them from code.

Hype vs. Ship: The Checklist Lie

There’s a seductive logic to checklists. They promise repeatability. Fairness. That warm QA feeling of “we covered everything.”

But external code review checklists often read like they were written by an intern gunning for ISO 9001 compliance:

- Are functions less than 30 lines?

- Are there unit tests?

- Are exceptions handled?

- Is the code readable?

Sure, you can tick all those and still merge a ticking time bomb.

We saw this in a healthtech project where the review checklist was pristine, but no one flagged that the API accepted unbounded patient uploads. Whoops. That bug cost us three days and a very annoyed hospital admin.

What works better? Review prompts that adapt to the type of change:

- If it touches auth, ask: “What roles can now access this route?”

- If it modifies a data model: “What existing records could break?”

- If it tweaks infra: “How does this affect rollback paths?”

These aren’t checkbox items. They’re prompts for thinking. Better yet, bake them into your PR template so the original dev answers them upfront - giving reviewers a smarter jumping-off point.
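Here is a minimal sketch of the PR-template idea. It assumes Python and a repo where file paths reveal what a change touches. The glob patterns, the PROMPTS_BY_AREA mapping, and both function names are hypothetical, so adapt them to your own layout. The script picks the prompts whose patterns match the changed files and renders them as questions the author answers before anyone reviews.

```python
from fnmatch import fnmatchcase

# Hypothetical mapping: change type -> (path globs, question for the author).
# The globs are assumptions; tune them to your repo layout.
PROMPTS_BY_AREA = {
    "auth": (["*auth*", "*permission*"], "What roles can now access this route?"),
    "data model": (["*models/*", "*migrations/*"], "What existing records could break?"),
    "infra": (["infra/*", "*.tf", "*Dockerfile*"], "How does this affect rollback paths?"),
}


def prompts_for(changed_files: list[str]) -> list[str]:
    """Return each prompt whose globs match at least one changed file."""
    prompts = []
    for globs, question in PROMPTS_BY_AREA.values():
        # fnmatch's "*" also matches "/", so "*auth*" catches src/auth/login.py.
        if any(fnmatchcase(path, g) for path in changed_files for g in globs):
            prompts.append(question)
    return prompts


def render_template_section(changed_files: list[str]) -> str:
    """Render the matched prompts as a markdown section for the PR body."""
    lines = ["## Questions for the author"]
    for question in prompts_for(changed_files):
        lines.append(f"- {question}")
        lines.append("  Answer: _fill in before requesting review_")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_template_section(["src/auth/session.py", "infra/main.tf"]))
```

In CI, you might feed it the output of `git diff --name-only` against the base branch and post the result into the PR description.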

The Throughput Illusion

Let’s get honest: external code reviews are often more about optics than outcomes.

They look good in audits. They soothe execs worried about tech debt. But how often do they actually catch critical issues?

We once shadowed a team where external reviewers rubber-stamped 70% of PRs in under 30 minutes. Not because the code was flawless, but because they were juggling five repos across three clients and had a Slack backlog longer than War and Peace.

The fix isn’t more review time. It’s tighter scoping.

Instead of reviewing the whole PR, reviewers can focus on:

- High-risk diffs (auth, payment, core algorithms)

- Non-trivial new patterns (custom hooks, query builders)

- Security and perf-sensitive logic

The rest? Leave it to internal reviewers or even AI copilots. Yes, they’re imperfect - but they don’t burn out or mentally check out mid-review.
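Here is a rough sketch of that scoping step. It assumes file paths are a usable proxy for risk. The three buckets mirror the bullets above, but every glob and the split_review_scope name are illustrative assumptions. Files that match go to the external reviewer, grouped by bucket. Everything else falls through to internal reviewers or an AI pass.

```python
from fnmatch import fnmatchcase

# Hypothetical globs for the three buckets that deserve external attention.
EXTERNAL_FOCUS = {
    "high-risk": ["*auth*", "*payment*", "*billing*"],
    "new pattern": ["*hooks/*", "*query_builder*"],
    "security/perf": ["*crypto*", "*cache*", "*rate_limit*"],
}


def split_review_scope(changed_files: list[str]) -> tuple[dict[str, list[str]], list[str]]:
    """Partition changed files into external-review focus (by bucket) and the rest."""
    focus: dict[str, list[str]] = {}
    rest: list[str] = []
    for path in changed_files:
        # First bucket whose globs match wins; None means "not external scope".
        bucket = next(
            (name for name, globs in EXTERNAL_FOCUS.items()
             if any(fnmatchcase(path, g) for g in globs)),
            None,
        )
        if bucket is None:
            rest.append(path)
        else:
            focus.setdefault(bucket, []).append(path)
    return focus, rest
```

The design choice here is deliberately crude: a false positive costs the external reviewer a few extra minutes. A false negative only means the file still gets an internal look.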

The “What We Got Wrong” Confessional

At 1985, we once tried rotating external reviewers across client projects to “cross-pollinate” quality. In theory, this would reduce tunnel vision and increase shared standards.

In reality? We created a rotating cast of confused engineers.

One reviewer spent 20 minutes parsing domain logic in a logistics app - only to realize the API already had a helper that did exactly what the new code was doing. It wasn’t documented. It wasn’t obvious. But it was there.

The lesson?

External code reviews don’t fail because people are lazy or dumb. They fail because we drop them into unfamiliar terrain without a map.

So now we do three things differently:

- Every repo has a living REVIEW_GUIDE.md that highlights key decisions, common traps, and do-not-touch zones.

- We pair external reviewers with internal “code buddies” who can explain decisions live, not posthumously.

- We log “review regrets” - bugs or misjudgments that slipped through - into a shared doc. Every sprint, we revisit them.

It’s not glamorous. But it keeps us honest.
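One way to keep a review-regrets log queryable is sketched below in Python. The ReviewRegret fields and the recurring_areas helper are hypothetical, not a prescribed format. At the sprint revisit, the helper surfaces the areas where misses keep recurring.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ReviewRegret:
    """One bug or misjudgment that slipped past review (hypothetical schema)."""
    logged_on: date
    area: str      # e.g. "auth", "uploads", "migrations"
    summary: str
    pr: str        # PR reference in whatever form your tracker uses


def recurring_areas(regrets: list[ReviewRegret], since: date, min_count: int = 2) -> list[tuple[str, int]]:
    """Areas with at least `min_count` regrets logged on or after `since`, most frequent first."""
    counts = Counter(r.area for r in regrets if r.logged_on >= since)
    return [(area, n) for area, n in counts.most_common() if n >= min_count]
```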

How to Actually Fix External Reviews

Let’s get tactical. You want your external code reviews to matter? Do this:

1. Pre-wire the reviewer

Before they open a single PR, give them:

- A Loom video of the system architecture

- A Notion page with current project goals

- Recent incidents or “gotchas” (e.g., flaky tests, silent failures)

It’s like giving a movie critic the plot before asking them to judge the twist.

2. Turn PRs into mini-narratives

Replace “Refactored UserService” with:

“We split UserService into two parts: one for auth concerns, one for profile logic. This unblocks a circular dependency with EmailService and simplifies testing. See commit abc123 for a failed experiment we rolled back.”

Now the reviewer knows what to look for.
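To nudge authors toward narrative descriptions, a CI step could flag PRs whose description lacks the expected sections. This sketch assumes markdown headings and a hypothetical set of four section names (What changed, Why, Risks, Tried and discarded). Rename them to match your own template.

```python
import re

# Hypothetical headings a "mini-narrative" PR description should contain.
REQUIRED_SECTIONS = ("What changed", "Why", "Risks", "Tried and discarded")


def missing_sections(description: str) -> list[str]:
    """Return the required headings that do not appear as markdown headings."""
    found = {
        m.group(1).strip().lower()
        for m in re.finditer(r"^#{1,6}\s+(.+)$", description, flags=re.MULTILINE)
    }
    return [s for s in REQUIRED_SECTIONS if s.lower() not in found]
```

A one-line description like “Refactored UserService” returns every section as missing. That makes a reasonable signal to block review until the story is written.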

3. Apply risk-based review

Don’t treat all PRs equally. Tag them:

- LOW: copy tweaks, UI changes

- MEDIUM: new features, perf optimizations

- HIGH: auth, payments, data migrations

High-risk PRs get extra eyes. Low-risk ones might just need a Copilot-assisted lint pass.
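The tiering above can be sketched as a small policy table. Several choices here are assumptions for illustration: the area labels, the rule that a PR inherits the risk of its highest-risk area, the MEDIUM default for unrecognized areas, and the reviewer counts in POLICY.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Tiers from the list above; the label strings themselves are assumptions.
AREA_RISK = {
    "copy": Risk.LOW, "ui": Risk.LOW,
    "feature": Risk.MEDIUM, "perf": Risk.MEDIUM,
    "auth": Risk.HIGH, "payments": Risk.HIGH, "migration": Risk.HIGH,
}

# Hypothetical review policy per tier: "extra eyes" for HIGH, lint-only for LOW.
POLICY = {
    Risk.LOW: {"human_reviewers": 0, "external": False, "ai_lint": True},
    Risk.MEDIUM: {"human_reviewers": 1, "external": False, "ai_lint": True},
    Risk.HIGH: {"human_reviewers": 2, "external": True, "ai_lint": True},
}


def tag_pr(areas: list[str]) -> Risk:
    """A PR takes the highest risk of anything it touches; unknown areas count as MEDIUM."""
    return max((AREA_RISK.get(a, Risk.MEDIUM) for a in areas), default=Risk.LOW)
```

Defaulting unknown areas to MEDIUM rather than LOW is a cautious choice. A new kind of change should get at least one human look until someone decides where it belongs.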

4. Record decision debt

Whenever a reviewer asks “Why did we do it this way?”, capture the answer in your design notes or README.

You’re not documenting for now - you’re documenting for three reviews from now, when the same question rears its head.
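Here is a minimal sketch of capturing decision debt. It assumes a plain markdown decision log checked into the repo. format_decision and record_decision are hypothetical helpers. The point is that the question, the answer, and where it came up all land in one append-only place.

```python
from datetime import date
from pathlib import Path


def format_decision(question: str, answer: str, raised_in: str, when: date) -> str:
    """Render one decision-log entry as markdown."""
    return (
        f"\n### {question}\n"
        f"- Date: {when.isoformat()}\n"
        f"- Raised in: {raised_in}\n\n"
        f"{answer.strip()}\n"
    )


def record_decision(notes: Path, question: str, answer: str, raised_in: str) -> None:
    """Append an entry dated today; creates the file, but its folder must already exist."""
    entry = format_decision(question, answer, raised_in, date.today())
    with notes.open("a", encoding="utf-8") as fh:
        fh.write(entry)
```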

5. Incentivize great reviews

Not with cash (though tempting), but with visibility:

- Highlight reviewers in retros

- Promote high-signal comments in Slack

- Track reviewer accuracy over time - who catches real issues vs. bikesheds

We once ran a “Bug Bounty for Reviewers” contest internally. One dev flagged a subtle data leak. Won a cake. The good kind, not just Jira points.
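Tracking reviewer accuracy requires an outcome label on each comment. This sketch assumes comments are tagged after the fact with hypothetical labels such as real_issue or bikeshed. It then computes the share of each reviewer's comments that turned out to be real issues. It ignores volume, so read it alongside raw comment counts.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ReviewComment:
    reviewer: str
    outcome: str  # hypothetical labels: "real_issue", "bikeshed", "noise"


def reviewer_signal(comments: list[ReviewComment]) -> dict[str, float]:
    """Fraction of each reviewer's comments later confirmed as real issues."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for c in comments:
        totals[c.reviewer] += 1
        if c.outcome == "real_issue":
            hits[c.reviewer] += 1
    return {r: hits[r] / totals[r] for r in totals}
```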

Code Review Red Flags

If your external code reviews show any of these patterns, fix fast.

🚩 Reviews under 3 minutes

Unless it’s a README typo, that’s not diligence - it’s a drive-by.

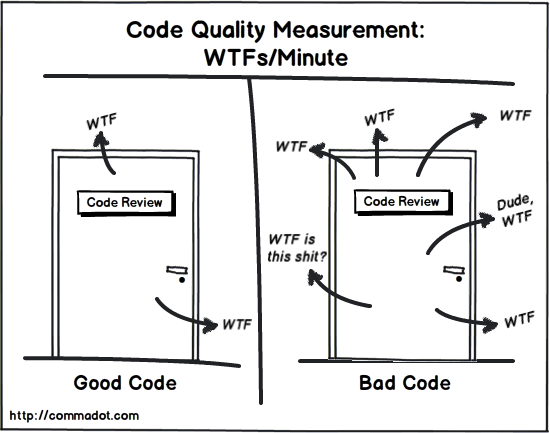

🚩 Repeated comments about style, none about logic

Lint tools exist. Reviewers should focus on thinking, not tab-widths.

🚩 PR authors preemptively say “happy to revert if needed”

That’s code for: “I’m not sure either, but YOLO.”

🚩 More comments than lines of code

Overcorrection alert. Probably someone trying too hard to prove their value.
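The red flags above can be checked mechanically once you export basic review data. This is a sketch under assumptions. The Review fields, the two-comment threshold for “repeated” style comments, and the literal match on “happy to revert” are illustrative choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Review:
    minutes: float          # time from opening the PR to submitting the review
    lines_changed: int
    docs_only: bool         # e.g. a README typo fix
    style_comments: int
    logic_comments: int
    author_note: str = ""   # the PR description or author's comment


def red_flags(r: Review) -> list[str]:
    """Return which of the red flags a single review trips."""
    flags = []
    if r.minutes < 3 and not r.docs_only:
        flags.append("drive-by: under 3 minutes")
    if r.style_comments >= 2 and r.logic_comments == 0:
        flags.append("style only, no logic comments")
    if "happy to revert" in r.author_note.lower():
        flags.append("author unsure: 'happy to revert'")
    if r.style_comments + r.logic_comments > r.lines_changed:
        flags.append("more comments than lines changed")
    return flags
```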

The Wrap-Up

External code reviews can work. But only if you stop treating them like a rubber-stamp ritual and start treating them like a collaboration. They need context, constraints, and clear goals.

At 1985, we’ve face-planted enough to know what doesn’t work. And we’ve battle-tested enough process patches to know what can.

So next time you spin up an external reviewer, don’t just hand them a checklist. Hand them the story.

Want an offshore squad that ships fast and reviews smart? We’ve got devs who can write, read, and challenge your code like they own it. Ping me and let’s talk.

FAQ

1. Why do external code reviews often fail to catch critical issues?

External code reviews frequently fall short because reviewers lack deep context. They don’t fully understand domain logic, architectural decisions, or recent changes that influenced the code. Without embedded knowledge, they focus on surface-level improvements instead of systemic risks.

2. Can external reviewers be effective without full project immersion?

Yes, but only with structured onboarding. Providing architecture diagrams, review guides, recent incidents, and annotated PRs helps external reviewers build situational awareness quickly. Pairing them with internal engineers as context guides also improves outcomes significantly.

3. What kind of bugs or risks do external reviews tend to miss?

They often miss integration edge cases, misuse of shared services, improper auth handling, or performance bottlenecks - especially when those risks don’t manifest clearly in the code diff. These issues require understanding cross-cutting concerns, not just code correctness.

4. How do you structure a PR so that external reviewers can add real value?

Great PRs tell a story. They include a detailed description of the problem, why this approach was taken, risks considered, and anything that was tried and discarded. Reviewers should walk in with clear intent, not reverse-engineer it from scattered diffs.

5. What’s the best way to segment review priorities across PRs?

Use risk-based tagging. Classify PRs as low, medium, or high risk based on what they touch - auth, payments, infra, migrations. This helps reviewers focus their cognitive load where it matters most and skip nitpicks on trivial UI tweaks.

6. Are checklists helpful for external code reviews?

Yes, but only if they’re contextual. Static checklists encourage box-ticking. Adaptive prompts - like “what existing data could this break?” or “how would we roll this back?” - lead to critical thinking. The goal is review depth, not checkbox completion.

7. How should teams measure the quality of code reviews, not just their quantity?

Track review outcomes: how often reviewers catch issues that would have caused incidents, how often their suggestions lead to cleaner abstractions, and how much time PR authors spend clarifying context. High-quality reviews reduce downstream rework and incident load.

8. What tools or workflows improve external review effectiveness?

PR templates with guided questions, REVIEW_GUIDE.md files per repo, Loom explainer videos, and buddy-pairing systems all improve comprehension. Some teams use AI tools to pre-lint PRs so reviewers can focus on logic and architectural judgment.

9. What role should internal engineers play when reviews are outsourced?

Internal engineers should act as review shepherds. They provide context, answer questions quickly, and highlight which PRs truly need external scrutiny. External reviewers work best when treated as collaborators - not judges dropped in cold.

10. What’s a strong long-term strategy for improving external code review outcomes?

Build institutional memory. Log review misses and near-misses. Keep a living review guide per service. Reward reviewers who flag high-signal risks. Treat review as a product practice - not a gatekeeping task - and give it the care you’d give to testing or CI/CD.