Zero-Day Onboarding: Shipping a Feature in 72 Hours with a Brand-New Squad

Behind the scenes of a 72-hour sprint that proves onboarding is a design problem, not a time sink.

The myth: onboarding takes weeks. The reality? You can ship meaningful code in three days—if you treat onboarding as a design problem, not a checklist.

Most onboarding processes are like IKEA manuals without the little cartoon guy. You get the parts, a vague sense of direction, and nobody tells you the table’s going to wobble unless you jam that weird hex key just right.

Now imagine trying to assemble that table mid-flight.

That’s what we asked our engineers to do: jump into an active product sprint at a B2B SaaS company, build a net-new feature, and get it running in staging—in 72 hours. No slow ramp-up. No documentation safari. Just context clues, tacit knowledge, and a dev environment with booby traps.

This is a field report from that sprint. Equal parts playbook and postmortem.

Day Zero Isn’t When You Start Coding

It’s when you learn what not to ask.

The feature brief seemed innocuous:

“Add dynamic content blocks to email templates based on user behavior in the past 24 hours.”

Sounds simple, right? Now fold that into a campaign delivery system running on a batch-cron job, with Redis-warmed conditions and a legacy personalization engine the original authors haven’t touched since pre-COVID.

The existing team was already behind schedule, and our insertion was more rescue mission than greenfield build.

The 1985 crew dropped in cold:

- 1 backend dev (Node.js + queue orchestration ninja)

- 1 frontend dev (React, Figma-wrangler)

- 1 QA (early-stage sadist, loves breaking things)

- 1 delivery lead (calendar herder)

- 1 product buddy (half translator, half therapist)

They got Slack access at 10:15 AM on a Tuesday. The first commit hit GitHub less than five hours later.

Phase One: Speedrunning the System Without a Map

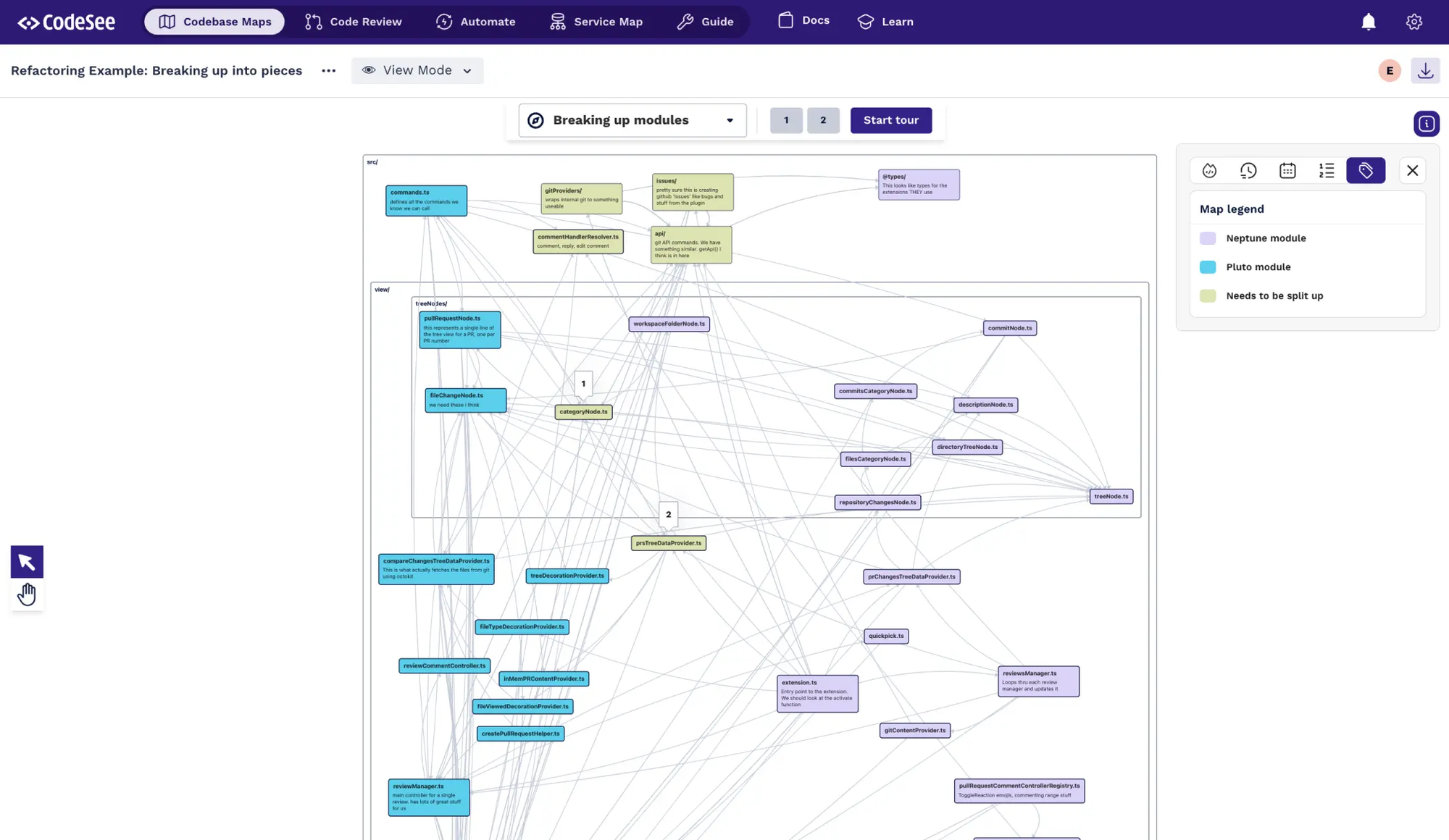

If you want to build fast, don’t start with the codebase. Start with the “shape” of the system.

By lunchtime, we weren’t reading files—we were triangulating behaviors:

- Where is user behavior captured, stored, queried?

- When does the system render content blocks?

- Who owns the rendering logic and who merely triggers it?

Most teams get stuck here, drowning in abstractions. We treated it like a scavenger hunt. Every engineer picked a thread and pulled:

- One traced how content blocks were fetched in live campaigns.

- Another traced the fallback content logic.

- The QA peeked at old test cases to reverse-engineer edge scenarios.

Slack, Day 1, 1:41 PM

Our Dev: “Hey—who owns the campaign-conditions file? Seeing a rule that checks for event.last24h but not sure where that’s hydrated.”

Client Dev: “That’s a ghost. It was deprecated but never removed. We switched to a Redis-warmed model 6 months ago.”

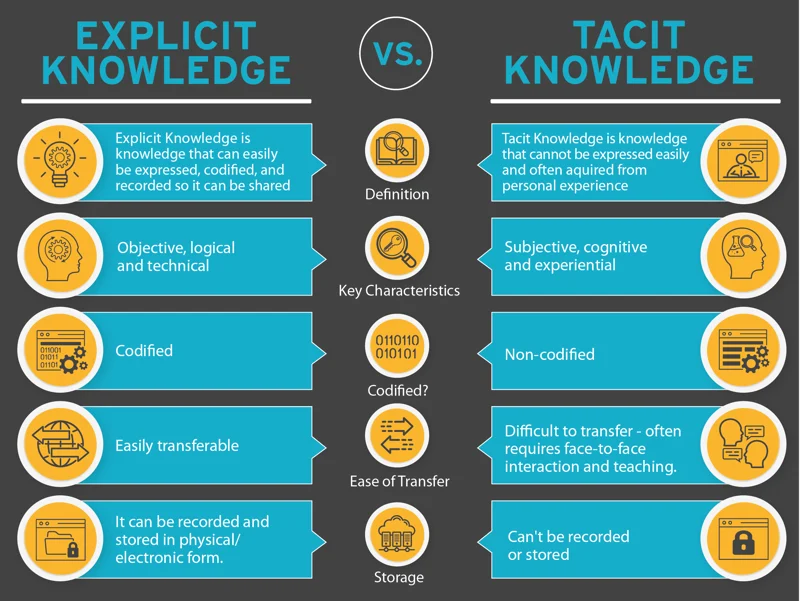

That moment? Worth 40 pages of docs. Tacit knowledge, surfaced quickly.

By EOD, we had:

- Local environments running for everyone.

- A test payload with simulated behavioral events.

- A basic feature flag stub to route between old/new renderers.

No tickets. No ceremonies. Just focused exploration and fast feedback.

Phase Two: Break It Early, Then Break It Differently

By Day 2, we weren’t talking about onboarding. We were just building.

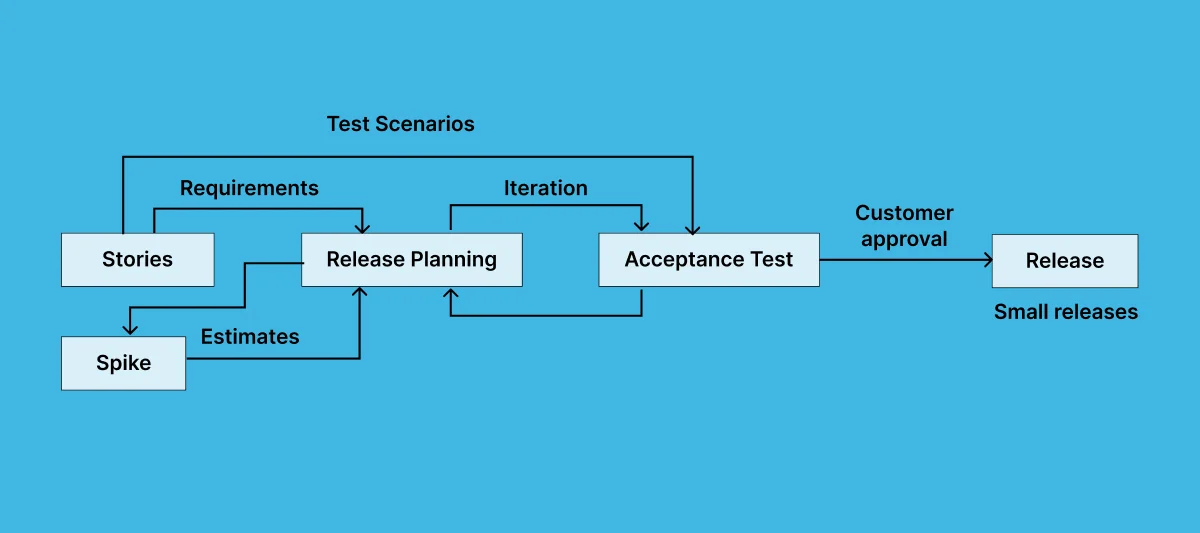

We took a “spike-and-replace” approach:

- Build the new renderer outside the main flow.

- Feed it mocked data.

- See what breaks.

This gave us two critical things:

- A confidence layer—if the spike behaves, it’s worth integrating.

- A firehose of failure points—because nothing reveals edge cases like a naive implementation.

Slack, Day 2, 2:12 PM

Client QA: “Preview fails when eventType is null.”

Our QA: “Caught that too—pushing a fallback to empty string with warning log. Will tag for cleanup.”

By hour 36, the new renderer could:

- Fetch recent user events.

- Apply content matching rules.

- Slot the right block into the existing email template.

And crucially, it could fail gracefully—falling back to default content or skipping dynamic blocks entirely.

We didn’t aim for elegant. We aimed for shippable.

Phase Three: The Invisible Work That Makes It Stick

By Day 3, the code was done. But the real test? Whether we could walk away and leave the feature maintainable.

So we recorded a 15-minute Loom explaining:

- The new block renderer’s data contract.

- Where the feature flag lives.

- What happens when user behavior data is missing.

No polished slides. Just a dev talking through their PR like they were explaining it to their future self.

We also dropped:

- A basic smoke test script for QA.

- A README.md inside the feature folder.

- A Slack thread linking to both, tagged for the next sprint owner.

Client PM, Day 3, 6:09 PM

“This is nuts. We’ve had contractors take two weeks just to set up their laptop. You guys shipped and documented in 3 days.”

Not to brag, but… okay, we’re bragging a little.

How We Actually Pull This Off: Not Magic, Just Muscle Memory

Over the past year, we’ve run over a dozen “zero-day” insertions like this. They don’t all ship in 3 days—but they all get real traction fast.

Here’s why:

1. We design for ambiguity, not clarity.

We don’t treat onboarding like a knowledge dump. We treat it like an inference engine. What can a new dev figure out with partial information and the right breadcrumbs?

2. We trust questions more than docs.

Our devs are trained to ask dumb questions fast—and to sense when silence hides a risky assumption. Documentation is helpful. Live context is gold.

3. We ship junk early.

We encourage “bad spikes” as a tactic. A half-working prototype reveals more system truths than three Figma reviews and a backlog grooming combined.

4. We bake in a product buddy.

Every new squad gets a “translator” from our side. Not a project manager. Someone who knows where the dragons lie and how to ask “what’s the real blocker here?”

5. We end with a “reverse handoff.”

Final deliverable = a Loom walkthrough + cleanup TODOs. Because code stays longer than people.

So When Doesn’t This Work?

We’ve seen zero-day onboarding fail, and here’s where it usually breaks:

1. The system lies.

If your staging env doesn’t mirror prod, or your feature flags behave differently across tenants, your new devs will waste hours fighting ghosts.

2. The team hoards knowledge.

If senior engineers treat questions as interruptions, new squads will learn slowly—and stop asking. That’s game over.

3. The setup is too safe.

Paradoxically, the more you “protect” new teams by insulating them from real code or shipping pressure, the slower they learn. You can’t debug from the bleachers.

The Takeaway: Onboarding Is an Execution Path, Not a Timeline

Most orgs think of onboarding as “read these docs, attend these meetings, survive this week.” We treat onboarding as a sprint constraint. You ship in 3 days, and everything else bends to support that goal.

Is it always this fast? No.

Can it always be this focused? Absolutely.

The secret isn’t better orientation decks or longer ramp-up windows. It’s designing systems where smart engineers can feel their way into context—and where failure is fast, visible, and recoverable.

You don’t need more slides. You need more frictionless commits.

Want to See This in Action?

We’ve run zero-day squads inside fintech scale-ups, legacy edtech systems, and even a logistics app built on Perl (don’t ask).

If you’re curious whether this could work in your world—let’s talk. No slide decks. Just a 20-minute call, some Slack transcripts, and a bet: we’ll ship faster than your last onboarding did.

FAQ

1. What exactly is “zero-day onboarding”?

Zero-day onboarding is our term for dropping a brand-new squad into an active product sprint with the expectation that they ship meaningful code within 72 hours. It’s not about rushing; it’s about structuring onboarding as a rapid-context acquisition loop, using real tasks to surface tacit knowledge, undocumented edge cases, and integration quirks faster than any read-only handover.

2. Isn’t this risky for critical production systems?

Yes—if you throw untested people into prod flows without guardrails. That’s why we only do this with battle-tested squads and behind clear feature flags or sandboxed environments. The risk isn’t in shipping fast—it’s in shipping without visibility or fallback logic. Our teams spike safely, push early, and isolate changes until confidence is high.

3. How do new developers get up to speed without documentation?

They don’t rely on documentation alone—they triangulate context. We train our engineers to ask targeted questions, trace real behaviors in the codebase, and test assumptions with micro-experiments. Documentation helps, but most systems have critical knowledge living in people’s heads or buried in legacy scripts. Our approach surfaces that fast.

4. Do you assign mentors or “buddies” during onboarding?

Yes, but not in a traditional sense. We assign a product buddy from our side who’s familiar with similar builds and serves as an interpreter—not a blocker. This person nudges the team toward clarity, catches context gaps, and helps de-risk technical decisions in real time. They're optional after Day 3 but essential at the start.

5. What tools do you use to facilitate this process?

Slack and GitHub are central—everything else is optional. Loom is our preferred async documentation tool, especially for walkthroughs and reverse handoffs. We sometimes use Tuple for live debugging or FigJam for quick architecture whiteboarding, but the real enabler is structured messaging, not tooling overload.

6. How do you ensure quality with such fast turnaround?

By shipping ugly code early and refining it collaboratively. We lean on strong QA automation, a fail-fast spike mentality, and clearly scoped integration points. The goal isn’t perfection in 72 hours—it’s momentum with guardrails. Once the system is understood and behaviors are predictable, cleanup becomes much easier and safer.

7. What kinds of features are best suited for zero-day onboarding?

Self-contained features that don’t require rewriting core platform logic are ideal. Anything that can be gated behind a feature flag, runs on isolated data inputs, or interacts with well-defined APIs is fair game. We avoid deep refactors or performance-critical flows unless we’ve previously worked in that codebase.

8. What happens after the 72-hour push?

We conduct a reverse handoff via a recorded Loom, brief documentation of key decisions, and flagged TODOs for further refinement. If the client team wants to take over, they get context quickly. If we’re continuing, our devs already have enough fluency to work at full speed from Day 4 onward.

9. Doesn’t this create pressure or burnout for the new team?

Surprisingly, no—because it removes the ambiguity that usually causes stress. Most engineers hate being idle or unsure; giving them real problems, fast feedback loops, and permission to break things (with safety nets) is energizing. Our squads sign up for the tempo, and we rotate them smartly to avoid overload.

10. Can this model scale across multiple squads or larger orgs?

It can, with discipline. The key is modularizing systems so each squad can enter at a logical boundary—like a service, feature, or user journey. It also requires an organizational culture that supports fast feedback, clear escalation paths, and psychological safety for asking “obvious” questions. We’ve seen it work in orgs of 50 and 500+. The pattern holds—it just needs tighter choreography.